Head in the Clouds: Google Compute

Sep 12 2018

By: Christopher Maddalena

Introduction

This article is a companion piece for this primer:

Head in the Clouds – Christopher Maddalena – Medium

In the cloud space, there are three major providers at this time: Amazon Web Services (AWS), Microsoft Azure, and…

posts.specterops.io

IP Address Ranges

Google has, by far, the most convoluted solution for fetching Compute IP addresses, which is detailed here in the Google Compute Engine documentation:

Google Compute Engine FAQ | Compute Engine Documentation | Google Cloud

Both persistent disks and Google Cloud Storage can both be used to store files but are very different offerings. Google…

cloud.google.com

To get the IP address ranges, one must first fetch the TXT DNS record for _cloud-netblocks.googleusercontent.com, like so:

nslookup -q=TXT _cloud-netblocks.googleusercontent.com 8.8.8.8

Server: 8.8.8.8

Address: 8.8.8.8#53

Non-authoritative answer:

_cloud-netblocks.googleusercontent.com text = “v=spf1 include:_cloud-netblocks1.googleusercontent.com include:_cloud-netblocks2.googleusercontent.com include:_cloud-netblocks3.googleusercontent.com include:_cloud-netblocks4.googleusercontent.com include:_cloud-netblocks5.googleusercontent.com ?all”

Each “_cloud-netblocks#” name included in the returned SPF record points to another TXT record that holds part of Google Compute’s current IP address ranges. To get the full list, an additional lookup must be performed for each _cloud-netblocks hostname, one at a time, including any further names those records include in turn:

nslookup -q=TXT _cloud-netblocks1.googleusercontent.com 8.8.8.8

Server: 8.8.8.8

Address: 8.8.8.8#53

Non-authoritative answer:

_cloud-netblocks1.googleusercontent.com text = “v=spf1 include:_cloud-netblocks6.googleusercontent.com ip4:8.34.208.0/20 ip4:8.35.192.0/21 ip4:8.35.200.0/23 ip4:108.59.80.0/20 ip4:108.170.192.0/20 ip4:108.170.208.0/21 ip4:108.170.216.0/22 ip4:108.170.220.0/23 ip4:108.170.222.0/24 ip4:35.224.0.0/13 ?all”
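The chain of lookups above can be walked recursively. The following is a minimal sketch, not the author's script: the function names parse_spf and collect_ranges are hypothetical, and the DNS query itself is left to a caller-supplied lookup function that returns the TXT strings for a name. One way to supply it, assuming the third-party dnspython package is installed, is to wrap dns.resolver.resolve(name, "TXT").

```python
def parse_spf(record):
    """Split one SPF TXT record into (include names, ip4 CIDR ranges)."""
    includes, ranges = [], []
    # Strip surrounding quotes (straight or curly) left over from tool output.
    for token in record.strip('"“” ').split():
        if token.startswith("include:"):
            includes.append(token[len("include:"):])
        elif token.startswith("ip4:"):
            ranges.append(token[len("ip4:"):])
    return includes, ranges


def collect_ranges(name, lookup, seen=None):
    """Follow include: entries depth-first and gather every ip4 range.

    `lookup` is an assumed callable: given a hostname, it returns a list of
    TXT record strings for that name (an empty list if there are none).
    """
    seen = set() if seen is None else seen
    if name in seen:  # guard against records that include each other
        return []
    seen.add(name)
    found = []
    for record in lookup(name):
        includes, ranges = parse_spf(record)
        found.extend(ranges)
        for child in includes:
            found.extend(collect_ranges(child, lookup, seen))
    return found
```

Calling collect_ranges("_cloud-netblocks.googleusercontent.com", lookup) with a real resolver behind lookup would return the ip4 ranges from every included record. Keeping the resolver separate means the parsing logic can be checked offline against saved records.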

Making Use of the IP Addresses

An up-to-date list of these IP addresses is useful for identifying assets hosted in a Google Compute environment. Any domain or subdomain that resolves to an IP address in the list is most likely backed by a Google-hosted resource, such as a storage bucket or Compute Engine server.
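As a rough illustration of that check, the sketch below tests whether a single IP address falls inside any collected range using Python's standard ipaddress module. The function name find_matching_range is hypothetical, and the ranges in the usage note are taken from the sample nslookup output above.

```python
import ipaddress


def find_matching_range(address, cidr_ranges):
    """Return the first CIDR range containing `address`, or None."""
    ip = ipaddress.ip_address(address)
    for cidr in cidr_ranges:
        # strict=False tolerates ranges written with host bits set.
        network = ipaddress.ip_network(cidr, strict=False)
        if ip in network:
            return cidr
    return None
```

For example, find_matching_range("8.34.210.7", ["8.34.208.0/20", "35.224.0.0/13"]) returns "8.34.208.0/20". A hostname would first need to be resolved to an address, for instance with socket.gethostbyname.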

Maintaining an Updated Master List

The collection of these IP addresses has been automated in the following script:

The script fetches the latest IP address ranges used by each provider and then outputs one list in a CloudIPs.txt file. Each range is on a new line following a header naming the service, e.g. “# Google Compute Engine.”

Storage: Compute Buckets

Google’s buckets work in a similar manner to AWS buckets. The address scheme looks like this:

https://storage.googleapis.com/cmaddy/

Like AWS, Compute buckets can be discovered and enumerated using brute force by guessing a bucket’s name and checking the XML response.
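A minimal sketch of that check follows. It rests on assumptions that should be verified against live responses: that a missing bucket returns an XML Error document with the code NoSuchBucket, that a private bucket returns AccessDenied, and that a publicly listable bucket returns a ListBucketResult document. The function names and the returned labels are hypothetical.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET


def _local(tag):
    """Drop any XML namespace prefix from an element tag."""
    return tag.rsplit("}", 1)[-1]


def classify_bucket_response(status, body):
    """Map an HTTP status and XML body to a rough bucket state."""
    try:
        root = ET.fromstring(body)
    except ET.ParseError:
        return "unknown"
    if status == 200 and _local(root.tag) == "ListBucketResult":
        return "listable"
    if _local(root.tag) == "Error":
        code = next((el.text for el in root.iter() if _local(el.tag) == "Code"), None)
        if code == "NoSuchBucket":      # assumed code for a nonexistent bucket
            return "missing"
        if code == "AccessDenied":      # assumed code for an existing, private bucket
            return "exists-private"
    return "unknown"


def check_bucket(name, timeout=10):
    """Request a guessed bucket name and classify the XML response."""
    url = f"https://storage.googleapis.com/{name}/"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return classify_bucket_response(resp.status, resp.read())
    except urllib.error.HTTPError as err:
        # Error responses still carry an XML body worth inspecting.
        return classify_bucket_response(err.code, err.read())
```

Separating the classification from the network request keeps the parsing logic testable without sending any traffic.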

Bucket Names

Google allows users to name buckets using DNS-compliant names, but if the name includes a dot it must be a valid domain name or subdomain and the user must be able to prove they own the domain. This is detailed in Google’s documentation for “Domain-Named Bucket Verification.”

Domain-Named Bucket Verification | Cloud Storage Documentation | Google Cloud

If your project intends to have a domain-named bucket, the team member creating the bucket must demonstrate that they…

cloud.google.com

Virtual Servers: Compute Engine

Google’s virtual machine offering is called Compute Engine. Google’s documentation for the Compute Engine metadata service can be found here:

Storing and Retrieving Instance Metadata | Compute Engine Documentation | Google Cloud

Every instance stores its metadata on a metadata server. You can query this metadata server programmatically, from…

cloud.google.com

The metadata service is covered in the main primer article.

Authentication: Google Accounts

A Compute account is attached to an active Google or Google Apps account. This account may add new users, but those users must also have active Google or Google Apps accounts. Once a Google account is linked, that user can sign in with it and interact with the Google Compute resources assigned to them.

Credential Files

When the Google Compute SDK, Compute’s command line tool, is used for the first time, the user must run gcloud init to authenticate (or gcloud auth login). This launches a web browser for the user to sign in using their Google account via OAuth2. At this point, several credential files are set up in the user’s home directory:

Linux and macOS: ~/.config/gcloud/

Windows: C:\Users\USERNAME\AppData\Roaming\gcloud\

Inside the gcloud folder, the SDK creates two SQLite databases, credentials.db and access_tokens.db, and a “legacy_credentials” folder. The legacy_credentials folder contains a folder named for each Google account used with the SDK and these folders each contain a JSON file with that account’s client_id and client_secret.
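To make the layout concrete, here is a small sketch that checks a gcloud folder for the files described above. It only reports which files are present and does not read them. The helper names gcloud_config_dir and find_gcloud_files are hypothetical; the paths are the ones listed in this section.

```python
from pathlib import Path


def gcloud_config_dir(home, windows=False):
    """Build the expected gcloud folder path under a user's home directory."""
    home = Path(home)
    if windows:
        return home / "AppData" / "Roaming" / "gcloud"
    return home / ".config" / "gcloud"


def find_gcloud_files(config_dir):
    """Return a dict of the credential files that exist in `config_dir`."""
    config_dir = Path(config_dir)
    found = {}
    for name in ("credentials.db", "access_tokens.db"):
        path = config_dir / name
        if path.is_file():
            found[name] = [path]
    legacy = config_dir / "legacy_credentials"
    if legacy.is_dir():
        # One subfolder per Google account; rglob also picks up hidden files.
        found["legacy_credentials"] = sorted(p for p in legacy.rglob("*") if p.is_file())
    return found
```

For the current user on Linux or macOS, find_gcloud_files(gcloud_config_dir(Path.home())) returns whichever of these files are present.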

There is also a ~/.gsutil/credstore2 file. The credstore2 file is not easily found in the Google Compute documentation, but is referenced in the boto library used for interacting with Compute (also Azure and AWS) via Python:

GoogleCloudPlatform/gsutil

gsutil – A command line tool for interacting with cloud storage services.

github.com

This output from gsutil config may explain the existence of this file:

CommandException: OAuth2 is the preferred authentication mechanism with the Cloud SDK.

Run "gcloud auth login" to configure authentication, unless:

- You don't want gsutil to use OAuth2 credentials from the Cloud SDK, but instead want to manage credentials with .boto files generated by running "gsutil config"; in which case run "gcloud config set pass_credentials_to_gsutil false".

- You want to authenticate with an HMAC access key and secret, in which case run "gsutil config -a".

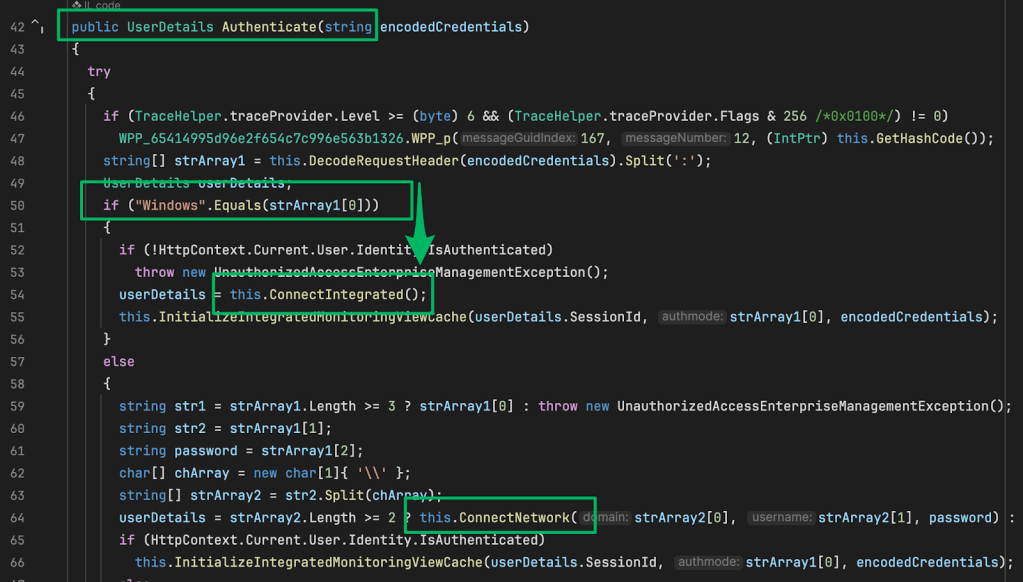

Stealing Credentials

If Compute credential files are found, access to a Google Compute account can be set up by copying the gcloud credentials.db and access_tokens.db database files and the full contents of the legacy_credentials directory from the home folder of a user who has logged in with the Google Compute SDK.

Note that the folders within the legacy_credentials directory contain an adc.json file and a hidden .boto file.

Once everything has been copied into ~/.config/gcloud/, the database contents can be checked using this command to list all of the authenticated accounts:

gcloud auth list

All successfully copied accounts will be listed. Next, an active account must be specified. This is done using the following command:

gcloud config set account ACCOUNT

Finally, run a gcloud command to test authentication, like gcloud compute instances list. If authentication is successful, the command will return results. If the OAuth2 authentication has expired or been revoked, or the copied data is incomplete, an error like this one will be returned:

ERROR: (gcloud.compute.instances.list) There was a problem refreshing your current auth tokens: invalid_grant: Token has been expired or revoked.

In this event, verify the copied data and/or try switching to a different account.
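The select-and-test steps above can be scripted. The sketch below is an illustration only: it assumes gcloud is on the PATH, the function names are hypothetical, and it treats the presence of "invalid_grant" in the error text as the sign of an expired or revoked token, based solely on the sample error shown above.

```python
import subprocess


def run_gcloud(*args):
    """Run a gcloud command and return (exit code, stdout, stderr)."""
    proc = subprocess.run(["gcloud", *args], capture_output=True, text=True)
    return proc.returncode, proc.stdout, proc.stderr


def token_rejected(stderr):
    """Heuristic: the sample error above contains 'invalid_grant'."""
    return "invalid_grant" in stderr


def check_account(account):
    """Select an account, then test it with a harmless listing command."""
    run_gcloud("config", "set", "account", account)
    code, _out, err = run_gcloud("compute", "instances", "list")
    if code == 0:
        return "ok"
    return "expired-or-revoked" if token_rejected(err) else "other-error"
```

Running check_account for each entry reported by gcloud auth list gives a quick view of which accounts still authenticate.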

If it turns out the authentication was revoked or has expired, it may be possible to refresh the login with Remote Desktop access. The gcloud auth login command will generate a new OAuth2 login URL and launch the system’s browser. If the user is logged into their Google account in the browser, then the Google profile will appear in a list for selection. Selecting the appropriate Google profile will complete the Google Compute SDK login and refresh the credential files. The new credentials can then be copied.

Automating Credential Theft

As a proof of concept, this tool was created to search for and collect credential files associated with Compute, AWS, and Azure:

chrismaddalena/SharpCloud

SharpCloud — Simple C# for checking for the existence of credential files related to AWS, Microsoft Azure, and Google…

github.com

SharpCloud is a simple C# console application that checks for the credential and config files associated with each cloud provider. If any are found, the contents of each file are dumped for collection and potential reuse.

Command Line: Google Compute SDK

Google uses the Google Compute SDK for command line access. Using the SDK is straightforward and requires logging in using an active Google account linked to a Compute project.

Cloud SDK | Cloud SDK | Google Cloud

A collection of command line tools for the Google Cloud Platform. Includes gcloud, bq, gsutil and other important…

cloud.google.com

The SDK is installed by downloading the appropriate package for the target operating system and then installing it. Once initialized with a Google account, the command line tools are ready to be used.

Google offers command documentation here:

gcloud | Cloud SDK | Google Cloud

cloud.google.com