Mar 9 2022

Revisiting Phishing Simulations

This post was written by Matt Hand and the rest of the SpecterOps team.

Overview

SpecterOps is a huge proponent of the “assumed breach” red team execution model where we begin the engagement with ceded access to simulate initial compromise. We believe that this allows us to focus our efforts on areas that create the most value for our clients — simulating what happens after initial access. Despite our strongly held belief that ceding initial access is the most effective use of resources for timeboxed engagements, we typically end up being asked to phish our way into the environment. This recurring ask led us to rethink the why and how of phishing as a component of offensive security engagements in order to understand the reasoning behind our clients’ requests and to come up with alternatives that would satisfy their needs, provide meaningful results, and make the best use of our inherently limited time.

Why We Phish

The first and most important thing to understand while revisiting our approach to phishing is why we are phishing in the first place. When we look at the catalog of available initial access techniques, we find that very few options are as appealing as phishing. For example, external system compromise, especially at very mature organizations, has become incredibly difficult. Physical site breach isn’t in the threat models for many of our clients. Achieving code execution through the compromise of web applications is exceedingly rare. This leaves us with phishing as the best, and most threat-replicative, option to gain an initial foothold in the environment. Phishing is extremely prevalent, one of the top ways that real threat actors gain access, and something that is seen by defenders on a nearly daily basis.

This fact can be coupled with an understanding of the reasoning behind the client’s request for us to achieve our own access into their network to form a more complete picture. It has been our experience that when a prospective client makes this request, they are hoping to be able to tell the story of a complete, outside-in attack chain at the end of the engagement. The people at the executive level who will be briefed on the results of our operation and secure resources for remediation or further investments are oftentimes non-technical. This means that they may not understand the spirit of the engagement fully, so adding in the “gimme” of ceded access can feel like cheating to them or dismissive of other defensive controls that they’ve put time and energy into.

So if clients want to be able to tell the story of a full attack chain, and phishing is the most effective way to gain initial access as part of that attack path, what’s the problem? It all comes back to making the most of our limited time and resources to provide as much value to our clients as possible. We believe that, while effective, the industry’s current approach to phishing is not providing the best bang for the buck. Here are a few of the biggest issues I’ve found with the current state of phishing, which have presented themselves everywhere I’ve worked.

Red Teams are Bad at Phishing Simulation *ducks*

We were recently on a call with a client we were actively engaged with when they said they’d caught our phishing campaign. We asked how they knew it was us. We were using completely burnable infrastructure, domains that had never been used maliciously, a brand-new payload we were using for the first time, and we’d only targeted a few dozen of their nearly 1,000 employees. Without missing a beat, our point of contact said, “it smelled like a red team.” When we asked him to elaborate, we found out that the overwhelming majority of phishing emails their SOC handles on a daily basis are low-sophistication, untargeted dragnet campaigns using lame pretexts and commodity payloads, so seeing something “advanced” immediately tipped them off that something was up. Simply put, we tried too hard.

This was pretty shocking, so we talked to a few friends working in other SOCs and they said the same thing. They’re usually able to pick up a campaign tied to a red team because the pretext is substantially better than what they’re actually subjected to (along with cheap VPSs and domain registrars). While it might sound like a humblebrag, this is actually not a good thing. If we’re not presenting a real-world simulation, we’re training defenders to be good at detecting and responding to red teams and not actual threats, which leads into our next point.

Phishing Simulations are not Threat Replicative

Thinking through the reality of today’s phishing landscape, we can pretty quickly see that phishing simulations as a component of a red team engagement are not an adequate representation of the environment in which defenders operate. We can think about this from a few different attackers’ perspectives:

- The Dragnet — A malicious actor collects a huge list of arbitrary email addresses and indiscriminately targets accounts in this list. Among the list of domains happens to be your company’s. If the attacker gains access to your environment during this campaign, then you may just be another network to try to ransom without much care for who you are or what you do.

- The Targeted Attack — In situations where someone really wants to get into your network, they probably have a reason to do so. Sinking months of time and resources into persistently targeting your user base may be a worthwhile investment for them. Building rapport over time and exploiting that trust now becomes realistic. Failing or getting caught doesn’t particularly matter because they can just keep trying over and over again until eventually, they get through.

We can see pretty quickly that a few days/weeks of phishing a scoped target list isn’t going to be representative of either case, both due to time (targeted) and scoping (dragnet). This leaves phishing simulations in a weird place, trying to be a small exposure to a persistent threat that has to work in order for the engagement to progress but doesn’t have the runway to be as replicative as necessary.

We (Generally) Already Know the Outcome

This issue is really about coming to grips with the unfortunate reality that we’re working with. Can you be phished? 100% yes. Given enough time and resources, a motivated and reasonably sophisticated threat actor will eventually gain access to your environment. If your company of 1,000 people has even a 1% click rate for generic phishing messages, then for every campaign you’re subjected to, you can expect roughly 10 people to click on whatever is in there. This is not a situation where we can say “well, it won’t happen to us because we have X, Y, and Z”; it is an inevitability. If we already know that you can and will be successfully phished at some point, why not spend time focusing on what will happen when that day comes and what we can do to limit an attacker’s ability to further their access?
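
The click-rate arithmetic above can be sketched in a few lines. This is a simplified model that assumes every user clicks independently with the same probability, which real campaigns do not strictly satisfy; it computes both the expected number of clickers and the probability that at least one person clicks.

```python
def click_exposure(users: int, click_rate: float) -> tuple[float, float]:
    """Return (expected clickers, probability that at least one user clicks).

    Assumes every user clicks independently with the same probability,
    which is a simplification of real-world behavior.
    """
    expected = users * click_rate
    # Probability that nobody clicks is (1 - p)^n; its complement is "at least one".
    p_at_least_one = 1 - (1 - click_rate) ** users
    return expected, p_at_least_one


expected, p_any = click_exposure(1000, 0.01)
print(f"expected clickers: {expected:.1f}")
print(f"P(at least one click): {p_any:.5f}")
```

With 1,000 users and a 1% click rate, this gives an expected 10 clickers and a probability above 99.99% that at least one person clicks, which is the inevitability described above expressed as a number.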

We’re Trying to Measure Too Much with Too Little

When we’re looking at providing meaningful recommendations at the end of an engagement, phishing falls into an odd place where it is such a big part of the engagement (how initial access is obtained) but the suggestions are often generic and don’t provide a lot of value. How many times have you seen “continue routine security awareness training” in a report?

We believe that this stems from the fact that we’re treating phishing as a binary answer to the question of “can you be phished?” rather than truly assessing the controls that make the phish possible. If we break down all of the distinct components of a successful campaign into their measurable pieces — a believable pretext, an email which lands in users’ inboxes, a payload which detonates with the desired effects, and a fault in the detection and response pipeline — it becomes evident pretty quickly that trying to squeeze all of that into a few days of effort isn’t realistic and leads to us missing a lot of valuable insights that would allow us to better advise the organizations with which we work.

A New Approach

What we propose today is a more thoughtful approach to the way we’ve been doing things. This approach relies on collaboration between the operators and the internal security team and takes advantage of strategic concessions. This in turn allows the operators to more directly assess the client’s area(s) of concern and provides more meaningful metrics that lead to better recommendations and outcomes.

The first thing we need to know is what the client is actually trying to measure. If they really just want a full attack chain to tell the story, then the legacy method still works. If we can help them clearly identify the questions they want answered, then we can directly assess them. Generally, when we’re talking about phishing susceptibility, we’re really lumping many questions into one. I’ve included titles to make referencing these questions easier.

- Social Engineering — What percentage of my user base is susceptible (i.e., will click on the “evil” parts of a phish, do what is asked, or engage the phisher) to phishing?

- Payload Delivery — What types of messages and attachments will successfully land in my users’ inboxes?

- Payload Detonation — What payloads will successfully detonate on my users’ systems or where can my users’ compromised credentials be leveraged?

- Response Process — Is my team able to detect and respond to a successful phish?

Each one of these questions can be assessed and answered individually with their own unique metrics.

Social Engineering

Overview

Starting first with Social Engineering, this question forms the basis on which products like Cofense’s PhishMe and tools like GoPhish are built. By routinely subjecting employees of the company to benign phishing attacks, susceptibility rates can be measured, and improvements tracked over long periods of time. If this question is already covered by the company’s existing routine security awareness training program, then contracted operators generally don’t provide much value when assessing it. However, if this is not part of the company’s ongoing training process, then getting these point-in-time metrics can provide a ton of value by highlighting where additional investments need to be made.

Execution

For the Social Engineering exercise, we’re really interested in seeing who will click on what. In an ideal scenario, this would include a number of campaigns that differ in their approach: for example, one that contains an attachment, one with a link to a site where users enter their credentials, and one that is highly targeted at a handful of individuals. Because we are trying to measure susceptibility, the operators should share the sending infrastructure (e.g., domains) and pretexts with the client in advance. This enables the client to configure technical controls to ensure the phishing emails are delivered to target users.

On the technical side, we need to measure susceptibility at each phase of the compromise (i.e., opening the email through executing the payload), so tracking user behavior is the largest hurdle. Thankfully, this has mostly been solved by existing phishing toolkits. Here’s an example of how we’d collect metrics for the campaigns.

- Emails Opened — Tracking pixel which calls back to a server with a unique identifier for each recipient

- Attachments Opened — Benign executable which calls back to a server with the username, hostname, and timestamp of the user who executed it

- Links Clicked — Track unique site visitors using the web server access logs along with a unique identifier per recipient

- Credentials Entered — Correlate the Links Clicked metric with entered data for both real and fake credentials

- Phish Reports — Collect the total number of reports from the client’s designated reporting mailbox at the end of the engagement
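
Phishing toolkits handle per-recipient tracking for you, but the mechanics are simple enough to sketch. The example below assumes a hypothetical tracking host (`track.example.com`), a hypothetical `rid` query parameter carrying the recipient identifier, and web server access logs in the common Combined Log Format. It mints a random identifier per recipient, embeds it in the pixel and link URLs, and maps identifiers seen in the access log back to recipients for the Emails Opened and Links Clicked metrics.

```python
import re
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical tracking host; substitute the engagement's own infrastructure.
TRACK_HOST = "https://track.example.com"

# Request portion of a Combined Log Format line, e.g. "GET /pixel.gif?rid=abc HTTP/1.1"
REQUEST_RE = re.compile(r'"(?:GET|POST) (\S+) HTTP/[\d.]+"')


def assign_ids(recipients):
    """Map a random, unguessable identifier to each recipient address."""
    return {secrets.token_urlsafe(8): r for r in recipients}


def tracking_urls(rid):
    """Build the pixel and link URLs embedded in one recipient's email."""
    query = urlencode({"rid": rid})
    return {
        "pixel": f"{TRACK_HOST}/pixel.gif?{query}",
        "link": f"{TRACK_HOST}/login?{query}",
    }


def recipients_seen(log_lines, id_map, path):
    """Return the recipients whose rid appears in requests for `path`."""
    seen = set()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue
        url = urlparse(match.group(1))
        if url.path != path:
            continue
        for rid in parse_qs(url.query).get("rid", []):
            # Ignore identifiers we never issued (scanners, typos, replays).
            if rid in id_map:
                seen.add(id_map[rid])
    return seen
```

Treat pixel-based open counts as approximate: many mail clients block remote images by default, and some fetch them automatically on delivery.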

Required Coordination

- Client adds the operator’s domain(s) or sender address(es) to their inbound mail allow list

- Addition of the benign executable to the allow list used by defensive products

Example Metrics

- Percentage of users who opened the email

- Percentage of users who clicked the link

- Percentage of users who opened the attachment

- Percentage of users who clicked the link and entered credentials

- Percentage of users who reported the phish

- Time to first report
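
Once per-recipient events are collected, the example metrics reduce to simple ratios. The sketch below assumes a hypothetical record layout (`RecipientResult`) with one entry per targeted user, and it measures time to first report from the campaign send time.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class RecipientResult:
    """Outcome of one campaign for one recipient (hypothetical record layout)."""
    opened: bool = False
    clicked: bool = False
    opened_attachment: bool = False
    entered_credentials: bool = False
    reported_at: Optional[datetime] = None


def campaign_metrics(results, sent_at):
    """Summarize per-recipient results into percentages and time to first report."""
    total = len(results)
    if total == 0:
        raise ValueError("no recipients in campaign")

    def pct(flag):
        return 100.0 * sum(1 for r in results if flag(r)) / total

    report_times = [r.reported_at for r in results if r.reported_at is not None]
    return {
        "opened_pct": pct(lambda r: r.opened),
        "clicked_pct": pct(lambda r: r.clicked),
        "attachment_pct": pct(lambda r: r.opened_attachment),
        # Only count credential entry from users who arrived via the link.
        "clicked_and_entered_pct": pct(lambda r: r.clicked and r.entered_credentials),
        "reported_pct": pct(lambda r: r.reported_at is not None),
        "time_to_first_report": (min(report_times) - sent_at) if report_times else None,
    }
```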

Payload Delivery

Overview

This is where things really start to get interesting. The focus of this assessment is to see what we can get to land in front of a user when they open their email client. This means assessing things like inbound spam and reputation scoring and filtering, the types of files allowed as attachments, external email tagging, and safe link replacement.

This is somewhat complementary to the Social Engineering engagement type as we’ll remove the explicit inbound allow of the operators’ domains to see what will hit a user’s inbox in more of a real-world situation. To accomplish this, the client provides a test email account that simulates a normal employee and ideally provides the operators with access to the account to limit engagement overhead and bottlenecks.

Execution

The Payload Delivery exercise can be a little tricky to assess fully simply because there are so many variables that go into determining what will ultimately hit a user’s inbox. Since we need to effectively fuzz some of these parameters, the assistance of tooling will also become important.

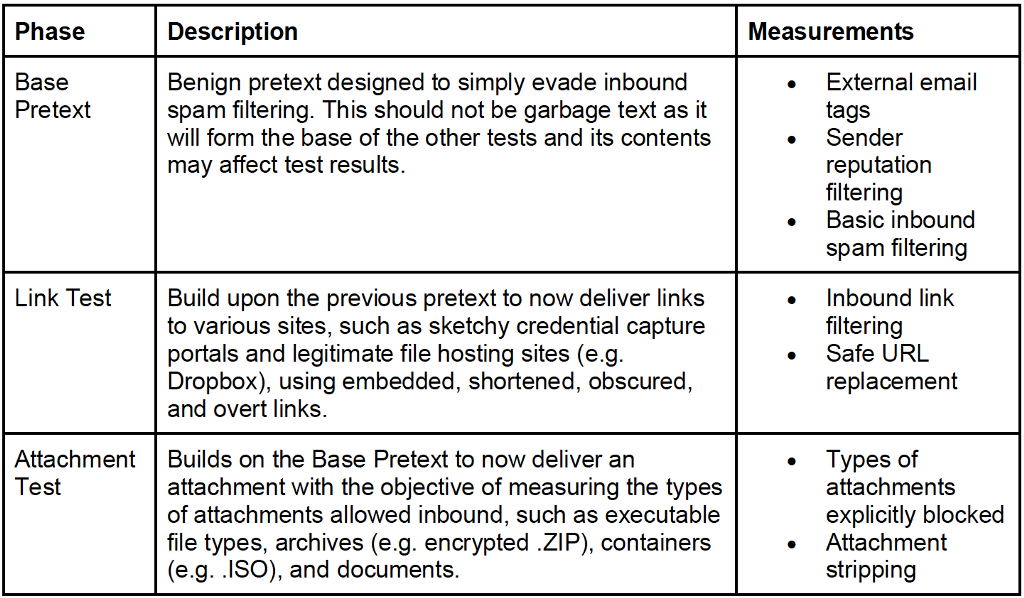

Our thoughts on how to accomplish this is to ask the client to provide a test email account that simulates a normal employee as the victim or recipient of all tests. Additionally, the client would ideally provide the operators with direct access to the account to self-observe test results and limit engagement overhead. The operators then create a base pretext from which they modify attributes to assess what will and will not be blocked. This may look like:

This exercise requires the operators to send numerous emails, so automation would greatly expedite the process. I’m not aware of any public tooling built specifically for this purpose at this time, but a simple email builder with string replacement would likely work just fine. The primary things to replace would be the link in the email body and the attachments. By building out a dictionary of links or link types and a collection of attachments with many different extensions, this can be almost completely automated.
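
A minimal sketch of such an email builder, using only Python’s standard `email` and `itertools` modules. The template text, the `{{LINK}}` placeholder, the link types, the attachment extensions, and the addresses are all hypothetical; each generated message combines one link with one attachment so that whatever reaches (or fails to reach) the test inbox can be traced back to its variant. Sending is intentionally left out; the messages could be handed to `smtplib.SMTP.send_message` or any other transport.

```python
import itertools
from email.message import EmailMessage

# Base pretext; {{LINK}} is a hypothetical placeholder swapped per variant.
BASE_BODY = (
    "Hi,\n\nPlease review the updated benefits summary before Friday:\n"
    "{{LINK}}\n\nThanks,\nHR"
)

# Hypothetical link types to compare (raw IP, newly registered domain, redirector).
LINKS = {
    "raw_ip": "http://203.0.113.10/benefits",
    "new_domain": "https://benefits-portal.example/login",
    "redirector": "https://redirect.example/?u=benefits",
}

# Attachment extensions to probe; contents are harmless placeholder bytes.
EXTENSIONS = ["pdf", "docx", "zip", "iso", "html", "lnk"]


def build_variants(sender, recipient):
    """Yield (variant_id, EmailMessage) for every link/attachment combination."""
    for (link_name, url), ext in itertools.product(LINKS.items(), EXTENSIONS):
        variant_id = f"{link_name}-{ext}"
        msg = EmailMessage()
        msg["From"] = sender
        msg["To"] = recipient
        # Tag the subject so results in the test inbox map back to a variant.
        msg["Subject"] = f"Benefits summary [{variant_id}]"
        msg.set_content(BASE_BODY.replace("{{LINK}}", url))
        msg.add_attachment(
            b"benign test file\n",
            maintype="application",
            subtype="octet-stream",
            filename=f"benefits_summary.{ext}",
        )
        yield variant_id, msg
```

Tagging the subject is one design choice among several; a custom header would keep the visible pretext untouched, but custom headers can themselves influence filtering, so it is worth deciding up front which trade-off fits the engagement.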

Required Coordination

- Client provides a test email account which simulates a standard employee

- (Optional) Client provides the operator with access to the test account

Example Metrics

- Suspicious message contents allowed (e.g., embedded links)

- Executable and archive file types allowed in

- External email tagging enabled

- Sender domain reputation filtering

Payload Detonation

Overview

The Payload Detonation exercise is definitely the most challenging to execute because it is technically complex and its success or failure criteria are difficult to define. Its objective is to see what can happen to a user and their workstation should they fall victim to a phishing campaign. In today’s operating environment, this generally means testing two things: payload execution on the host, and credential capture and reuse. The measurements are centered around endpoint and external service protections, such as host-based security products like EDR and multi-factor authentication (MFA) implementations on external services.

To aid in assessing this metric, a high level of collaboration is required between the operators and the client. Since we’re not interested in measuring phishing susceptibility or payload delivery, the client should provide a test user and workstation representative of a standard employee and configure security controls to explicitly allow inbound emails from the malicious sender address. In addition, the client should provide a “witting victim” (e.g., someone who will click the bad stuff in the email no matter what) to avoid the need to social engineer a user for each new payload test. This allows the operators to more directly assess what happens when things go “boom” without having physical control over the victim workstation.

Execution

Because we’re assessing two entirely different areas — payload execution and credential capture — we need to take two different approaches.

Beginning with payload execution, the operators develop an arsenal of executable payloads ranging from simulated commodity malware to more bespoke, obscured payloads. For example, this could span from an HTA containing staged Cobalt Strike Beacon shellcode all the way to encrypted, environmentally keyed, compressed persistence droppers. It is up to the operator to determine the level of sophistication required to effectively assess controls, but in general we recommend starting with the least advanced payloads and working up to more sophisticated ones to find the minimum effort required to land a payload on the host. The operator sends these attachments to the supplied test email address, where the witting victim attempts to execute each payload in the worst of situations, meaning accepting all prompts and ignoring all warnings. Additionally, the operator may opt to assess drive-by download susceptibility, where a link sent to the test account downloads the payload via the browser when clicked. For each payload, the operator records whether execution completed successfully and notes any security controls on the host that could have interfered with the compromise, such as download failures, SmartScreen, and antivirus notifications.
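
To keep the ladder of payloads organized, each detonation attempt can be logged as a simple record and queried for the least sophisticated payload that still executed. The record fields and the integer sophistication scale below are hypothetical conventions for illustration, not a standard.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PayloadTest:
    """One detonation attempt by the witting victim (hypothetical record layout)."""
    name: str
    sophistication: int   # 1 = commodity-like; higher = more bespoke
    delivery: str         # "attachment" or "drive-by"
    executed: bool        # did the payload run to completion?
    controls: list[str] = field(default_factory=list)  # e.g. "SmartScreen"


def minimum_successful(tests):
    """Return the least sophisticated payload that executed, or None."""
    successes = [t for t in tests if t.executed]
    return min(successes, key=lambda t: t.sophistication, default=None)


def controls_summary(tests):
    """Count how often each host control was observed across all attempts."""
    return dict(Counter(c for t in tests for c in t.controls))
```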

For credential capture and reuse, things become quite a bit easier, and the phishing aspect can be ignored almost entirely. What we’re really after is measuring what a malicious actor can do if a user accidentally submits their credentials to an attacker-controlled site. To perform this test, the client can simply provision a test account that simulates a real employee, including application access and MFA enrollment. The client then provides the operators with the username and password to simulate a successful credential capture. The operators use these credentials to attempt to authenticate to external services such as Microsoft 365, Okta, the corporate VPN portal, and other web applications used by the company’s employees. Throughout this process, the operators evaluate which services effectively enforce MFA, highlight any gaps in coverage, and may also simulate what an MFA bypass could look like, such as a credential harvesting page where the user also supplies a token value. Another interesting thing to test during this exercise is what happens if the user declines the suspicious MFA prompt, which bleeds somewhat into the Response Process exercise.
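
The credential reuse results can be tallied in the same spirit. This sketch only summarizes outcomes that the operators record by hand while trying each service; it does not authenticate to anything. The `AuthAttempt` layout is an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class AuthAttempt:
    """Result of replaying the test account's credentials against one service."""
    service: str
    password_accepted: bool   # were the username and password accepted?
    mfa_challenged: bool      # was a second factor requested afterward?


def mfa_gaps(attempts):
    """Services where the captured password alone was enough to get in."""
    return sorted(
        a.service for a in attempts if a.password_accepted and not a.mfa_challenged
    )


def mfa_coverage(attempts):
    """Percentage of password-accepting services that enforced MFA, or None."""
    accepted = [a for a in attempts if a.password_accepted]
    if not accepted:
        return None
    return 100.0 * sum(a.mfa_challenged for a in accepted) / len(accepted)
```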

Required Coordination

- Client adds the operator’s domain(s) to their allow list

- Client provides a test email account which simulates a standard employee

- Client will provision a test workstation which is representative of the standard corporate image

- Client will click all attachments and links sent to the test account on a provisioned workstation

Example Metrics

- Host-based security response to different payload types

- Host-based controls which prevented execution

- Implementation of MFA

- Suspicious authentication logging/alerting

Response Process

Overview

The final exercise type, and our team’s personal favorite, is one in which we assess the company’s incident response process. Think of this exercise as starting an assumed breach engagement where access is ceded via a simulated phish. This requires a little more finesse than the other exercise types because it needs to be as close to reality as possible. Ideally, the client identifies an employee (i.e., a simulated victim) who will voluntarily detonate the phishing payload on their workstation, accept any MFA prompts, and serve as the initial beachhead from which the operation is conducted.

The objective is not to get detected immediately on initial access, but rather to begin the operation as normal and wait until detection occurs organically as a result of activity later in the attack chain. Then, as the SOC/CIRT spins up its investigation, we assess their ability to trace the compromise back to the initial victim, at which point we interrupt the kill chain and debrief. In this engagement type, the question we’re trying to answer can be further deconstructed into four components:

- Did the action trigger an alert?

- Did the analyst triage the alert as a true positive?

- Were the response actions executed as expected?

- Did the response actions actually stop the attacker?

Execution

There isn’t much here that differs from the standard operating procedures of most offensive teams, other than the fact that we 100% know that the recipient of our phishing campaign will do whatever is asked of them to secure us access. However, this does not mean that we can send a garbage pretext and a bad payload. Because the initial email and payload will not be explicitly allowed, should ideally remain undetected, and will hopefully become part of an investigation later, a well-thought-out pretext and payload that meet the required sophistication should be used. Additionally, the operators may opt to include the target recipient in a larger group that receives the campaign, rather than individually targeting that specific user, to simulate a less-targeted adversary.

After receiving code execution or credentials from the volunteer, the engagement should progress as it would if access was gained organically. Since our goal is to simulate a real compromise, this means installing persistence to avoid having to ask the volunteer to rerun the payload.

Required Coordination

- Client supplies a “witting victim” who will open the phishing email and execute the payload or

- Client supplies valid domain credentials and will accept any MFA prompts

Example Metrics

- Time to detect the compromise

- Time to respond to the compromise

- Time to fully eradicate the compromise
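
The three timing metrics fall out of a handful of timestamps recorded during the engagement. The definitions in this sketch are assumptions (detection and eradication measured from initial access, response measured from detection); agreeing on the exact start and end points with the client beforehand keeps the numbers comparable across engagements.

```python
from datetime import datetime


def response_timeline(initial_access, detected, responded, eradicated):
    """Compute timing metrics from key engagement timestamps.

    Assumed definitions (agree on them with the client up front):
      time to detect:    initial access -> first true-positive alert
      time to respond:   detection      -> first containment action
      time to eradicate: initial access -> attacker access fully removed
    Stages that never happened are passed as None and reported as None.
    """
    def delta(start, end):
        return end - start if start is not None and end is not None else None

    return {
        "time_to_detect": delta(initial_access, detected),
        "time_to_respond": delta(detected, responded),
        "time_to_eradicate": delta(initial_access, eradicated),
    }


example = response_timeline(
    initial_access=datetime(2022, 3, 9, 9, 0),
    detected=datetime(2022, 3, 10, 14, 0),
    responded=datetime(2022, 3, 10, 16, 30),
    eradicated=None,  # engagement ended before full eradication
)
print(example)
```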

Other Considerations

While these distinct exercise types can help us more directly address the concerns of our clients, they need to be tuned to fit your team’s execution model. This means socializing them with your team, integrating them into the pipeline of those responsible for contract scoping, identifying or building out tooling to meet your operational needs, and integrating the metrics into your reports in a way that makes the best use of the data. For example, can we cede access normally for the engagement while also having operators work on the phishing side of the engagement to save time?

Conclusion

By revisiting how we’ve always done phishing simulations, we’ve identified ways in which we believe we can better assess, train, and advise our clients. We hope that by sharing our approaches to these problems, we can help other like-minded organizations make the shift and find ways to implement them in their own workflows.

This is not going to be an overnight shift of the way in which phishing is thought about, scoped, sold or performed. We believe that we can continue making incremental improvements to help teams make better use of their timeboxed engagements and provide more value to clients.

As hard as we may try to preach the gospel of assumed breach, phishing as a component of a full-scope red team is here to stay. Being thoughtful in our approach to assessing an organization’s risk so that we can provide meaningful metrics and guidance will allow us to provide more value through our work. Who knows, maybe we can make phishing so difficult that it becomes a thing of the past.

Revisiting Phishing Simulations was originally published in Posts By SpecterOps Team Members on Medium.