TL;DR: Mythic Eventing automates repetitive tasks during red team operations (RTO). This blog documents the eventing system and provides a collection of starter YAML scripts for reconnaissance and other common tasks. These can be modified to execute specific commands or Beacon Object Files (BOFs) based on your needs. The repository for these scripts is located here: https://github.com/MythicMeta/Pantheon

Introduction

During my internship at SpecterOps, I supported red team and penetration test engagements using Mythic C2. In some cases, whenever we got a new callback from a host, we ran the same reconnaissance commands again. Doing that over and over made it clear that Mythic’s eventing functionality could automate some of these routine tasks. Another opportunity for automation came when we pivoted through dozens of servers looking for credential material belonging to a specific user we had targeted through an attack path found in BloodHound. Running these commands manually takes a lot of time, which matters in time-constrained operations.

The goal is to speed up the initial reconnaissance phase so operators can focus on more complex objectives, like actually exploiting what the recon finds. This project addresses the gap in eventing documentation by providing practical YAML examples for common operational tasks and by documenting how to pass commands with specific arguments, as well as the different triggers that start the scripts, whether manual or time-based (for example, a cron schedule).

The official Mythic eventing documentation is located here, along with a blog from the developer, Cody Thomas:

Methodology and Framework

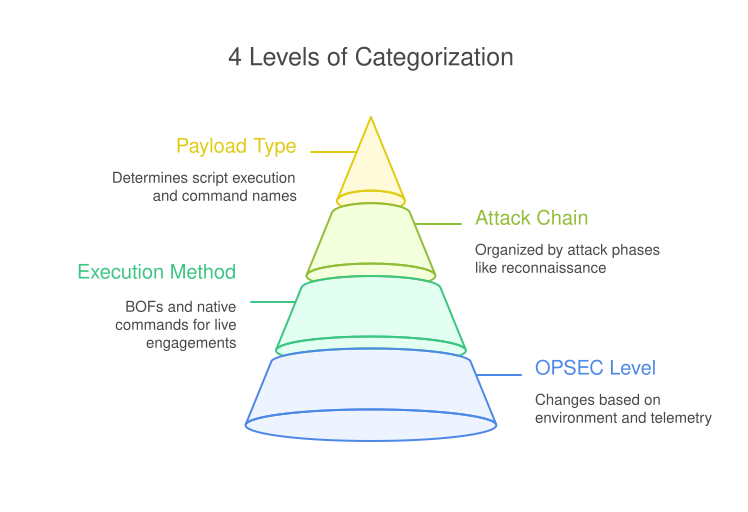

The repository uses an OPSEC categorization system to help operators get a basic understanding of the noise level of each script. Regardless of the OPSEC categorization, before using any of these YAMLs, operators should manually review them to understand what’s happening on the target system. Here’s how the categorization works:

OPSEC Level

The actual OPSEC impact will vary by environment, depending on the installed endpoint detection and response (EDR) solutions and the telemetry generated on the hosts. This rating gives a general idea of how much noise each script might generate.

BOF or Native Execution

Most pre-built scripts avoid actions like spawning new processes or dropping files to disk, focusing instead on OPSEC-conscious commands suitable for live engagements. With that in mind, most commands run as BOFs or as native commands within the agents.

Attack Chain Category

Scripts are organized by attack chain phase: reconnaissance, persistence, lateral movement, etc. Since recon involves many repetitive checks, automating them frees operators to execute more of their attack path in a timely manner. Most current scripts focus on reconnaissance, with some covering the identification of persistence and lateral movement opportunities.

Payload Type

The payload type determines a large part of a script’s execution for two main reasons. The first is the names of the commands themselves, which differ between agents; in the Pantheon repository, all of the starter scripts are written for the Apollo agent. The second is the host the payload is designed for. For example, a payload running Windows recon commands will not work properly on a macOS device.
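The one filename shown in this post, MEDIUM_PersistenceAssessment_Apollo.yaml, appears to carry the OPSEC level, the script’s purpose, and the payload type. If the repository consistently follows a LEVEL_Name_PayloadType.yaml pattern (an assumption inferred from that single example, so check the repo before relying on it), a small helper such as the hypothetical parse_script_name below can sort scripts by level or payload type before you review them.

```python
from pathlib import Path
from typing import NamedTuple


class ScriptInfo(NamedTuple):
    opsec_level: str   # e.g. "MEDIUM"
    name: str          # e.g. "PersistenceAssessment"
    payload_type: str  # e.g. "Apollo"


def parse_script_name(filename: str) -> ScriptInfo:
    """Split a script filename into OPSEC level, name, and payload type.

    ASSUMPTION: names follow LEVEL_Name_PayloadType.yaml. This pattern is
    inferred from one example filename, not from a documented convention.
    """
    stem = Path(filename).stem  # drops any directory and the .yaml extension
    parts = stem.split("_")
    if len(parts) < 3:
        raise ValueError(f"unexpected script name: {filename!r}")
    # First token = OPSEC level, last token = payload type,
    # everything in between = the script's descriptive name.
    return ScriptInfo(parts[0].upper(), "_".join(parts[1:-1]), parts[-1])
```

For example, parse_script_name("MEDIUM_PersistenceAssessment_Apollo.yaml") returns ScriptInfo(opsec_level='MEDIUM', name='PersistenceAssessment', payload_type='Apollo').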

OPSEC Considerations

- Always manually review the YAML script before execution or deployment. The scripts run a variety of commands targeting different areas of the intended operating system.

- Test each script extensively in a lab environment or test bed. This shows you how the scripts behave and lets you collect telemetry to estimate how much noise they would make in a production environment.

- BOFs can crash Beacons or cause unexpected results, so test their stability before executing them on a live Beacon. Some BOFs are outdated in the way they execute; for example, memory constraints in certain agents can cause some BOFs to hang.

- As mentioned previously, OPSEC levels vary by target environment. A bank in the S&P 500 is far more likely to have a dedicated security operations center (SOC) with tuned security information and event management (SIEM) and EDR solutions than a mom-and-pop shop running only Windows Defender on its endpoints. This changes how much telemetry defenders can see and use to find you.

Sources of BOFs and Agents

This project builds on work from the Mythic C2 and agent developers and the broader BOF development community. Many object files come from various repositories, and several operators shared their playbooks and techniques throughout the summer to help me gain operational context. This documentation and resource is intended to give back to that same community.

Special thanks to:

- Mythic by Cody Thomas

- Situational Awareness BOF by TrustedSec

- list-wam-accounts by Matt Creel

- C2-Tool-Collection by Outflank B.V.

- BOF_Collection forked from Steve Borosh

Walkthrough: Persistence Assessment Automation

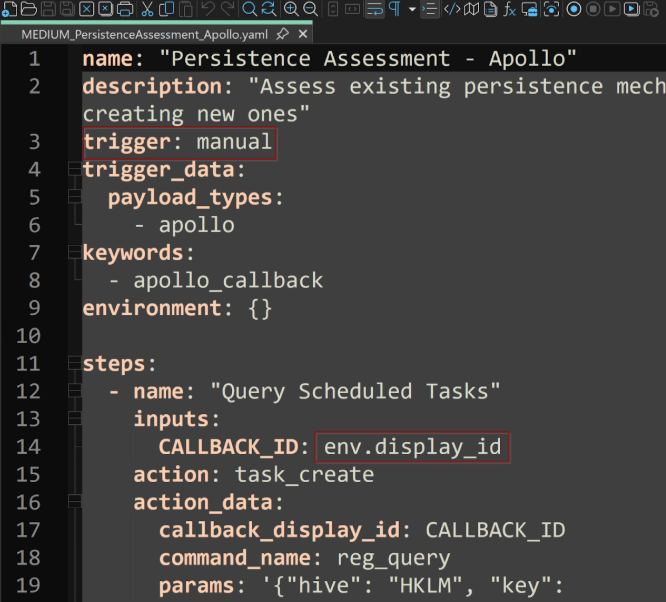

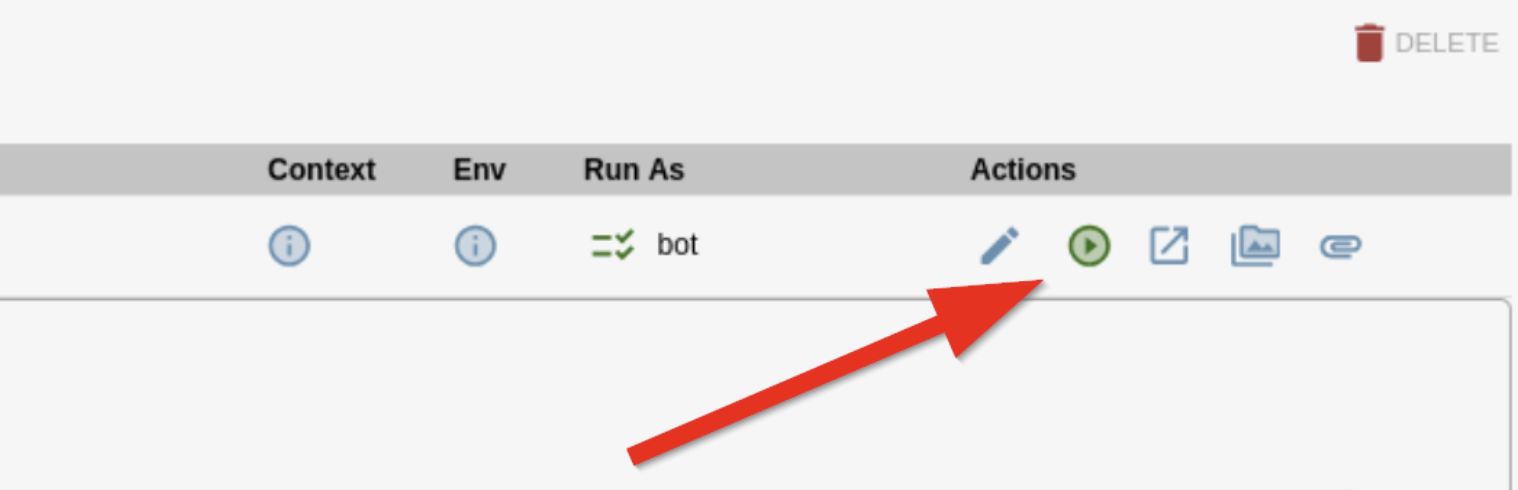

This script is an example of automating the discovery of potential persistence mechanisms with the Apollo agent on my test host. There are many ways to trigger eventing workflows; the two I use most are callback_new and manual. The callback_new trigger automatically executes a workflow whenever a new Beacon establishes contact with the teamserver, while the manual trigger lets you execute a workflow on demand by clicking the green play button for a specific script. The callback_new trigger is ideal for initial reconnaissance or setup commands that should run on every newly compromised host, whereas the manual trigger gives you precise control over which scripts execute on which targets. For this script, I chose the manual trigger: it enumerates a lot of registry keys, and I don’t want these BOFs to run on every callback I get.
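The difference between the two triggers can be pictured with a small model. This is a conceptual sketch, not Mythic’s implementation: the Workflow class and workflows_to_run function are hypothetical, and only the trigger names callback_new and manual come from Mythic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workflow:
    name: str
    trigger: str  # "callback_new" or "manual", the two triggers discussed above


def workflows_to_run(workflows, event, selected=None):
    """Return the names of the workflows that would fire for an event.

    Conceptual model only (hypothetical, not Mythic code):
    - a "callback_new" event fires every workflow with that trigger;
    - a "manual" event fires only the workflow the operator selected.
    Other triggers, such as time-based ones, are outside this model.
    """
    if event == "callback_new":
        return [w.name for w in workflows if w.trigger == "callback_new"]
    if event == "manual":
        return [w.name for w in workflows
                if w.trigger == "manual" and w.name == selected]
    return []
```

With a recon workflow on callback_new and a persistence assessment on manual, a new callback fires only the recon workflow; the persistence assessment runs only when the operator selects it and presses play.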

- The first thing I will need to do is grab the MEDIUM_PersistenceAssessment_Apollo.yaml file from the Pantheon GitHub repo.

- I will then change env.display_id, an input value keyword that allows us to fetch the named value from the environment, to the callback ID of the Beacon we want to execute this on. The callback ID is the number that identifies the Beacon running on a host; you can find it in the “Active Callbacks” pane within Mythic under the Interact column. If I wanted to run this for every callback, I would leave env.display_id unchanged. The value is easy to replace in every step with a match-and-replace. Finally, keep the trigger set to manual.

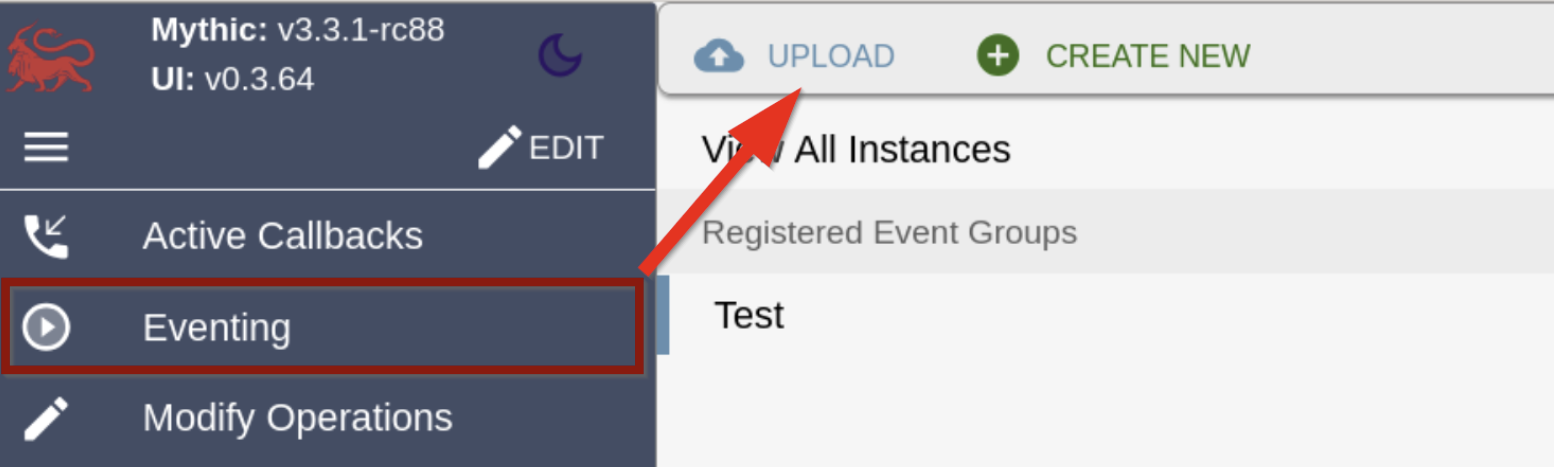

- We will upload this via the “Eventing” tab within Mythic.

- Once uploaded, we will click the green play button and watch the recon run for us. This will automate BOF and command execution.
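The match-and-replace step can also be scripted. A minimal sketch, assuming the downloaded YAML references the callback only through the literal token env.display_id (check your copy first, since a comment or description containing that token would be rewritten too); pin_to_callback and pin_file are hypothetical helpers, not part of Mythic or Pantheon.

```python
import re
from pathlib import Path


def pin_to_callback(yaml_text: str, callback_id: int) -> str:
    """Replace every env.display_id reference with a fixed callback ID.

    ASSUMPTION: env.display_id appears only where the callback ID is
    expected. Review the result before uploading it in the Eventing tab.
    """
    if callback_id <= 0:
        raise ValueError("callback IDs are positive integers")
    # \b on both sides avoids rewriting longer tokens such as env.display_id_old.
    return re.sub(r"\benv\.display_id\b", str(callback_id), yaml_text)


def pin_file(src: str, dst: str, callback_id: int) -> None:
    """Write a copy of src pinned to one callback, leaving src untouched."""
    text = Path(src).read_text(encoding="utf-8")
    Path(dst).write_text(pin_to_callback(text, callback_id), encoding="utf-8")
```

Writing to a separate file keeps the original script reusable for other callbacks.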

Here is the result of a few of the executed commands:

Troubleshooting Steps

Debugging these scripts took some trial and error: figuring out argument passing, whether commands were actually executing, and whether the script was broken or the BOF was outdated. Mythic provides several debugging options through both the eventing pane and the command pane, where you can view parameters and error messages.

One useful debugging trick: test every BOF directly in the command pane with the execute_coff command, which shows you how its arguments need to be passed in the eventing scripts. This also lets you pass the arguments as integers, strings, or wide strings without command-line parsing getting in the way.
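Many of these failures come down to a malformed params string, so a quick offline check can rule out the script before you start suspecting the BOF. A minimal sketch, assuming the steps have already been loaded into dictionaries carrying the command_name and params fields used in this post, and that params is meant to be JSON as in both examples here (loading the YAML itself would need a third-party parser such as PyYAML). check_params is hypothetical; it also flags a missing params field, which you may want to relax for commands that take no arguments.

```python
import json


def check_params(steps):
    """Report steps whose params string is not valid JSON.

    ASSUMPTION: each step is a dict with "command_name" and "params" keys,
    mirroring the fields in this post. Returns a list of
    (step_index, command_name, problem) tuples; empty means all parsed.
    """
    problems = []
    for index, step in enumerate(steps):
        command = step.get("command_name", "<no command_name>")
        params = step.get("params")
        if params is None:
            problems.append((index, command, "missing params"))
            continue
        if not isinstance(params, str):
            problems.append((index, command, "params is not a string"))
            continue
        try:
            json.loads(params)
        except json.JSONDecodeError as exc:
            # lineno/colno point at the character the JSON parser rejected.
            problems.append((index, command,
                             f"line {exc.lineno}, col {exc.colno}: {exc.msg}"))
    return problems
```

A classic catch is a registry path written with single backslashes: JSON rejects an escape such as \C, so the step is flagged before it ever reaches the agent.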

Argument Passing

Argument passing, both within Mythic and to the BOFs, was probably the biggest pain point, and there are multiple ways to pass arguments depending on the command and agent. Here’s a breakdown to save you some debugging time:

Native agent commands (JSON)

For example, to pass a specific hive and key as the target of Apollo’s reg_query command, we write:

```yaml
command_name: reg_query
params: '{"hive": "HKLM", "key": "SYSTEM\\CurrentControlSet\\Services\\Netlogon\\Parameters"}'
```
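The doubled backslashes are easy to get wrong. Inside a single-quoted YAML string a backslash is literal, so YAML passes \\ through unchanged and the JSON parser then reads it as one backslash. A minimal sketch that generates the line instead of hand-escaping it; reg_query_params and yaml_single_quoted are hypothetical helper names, and the hive and key field names are taken from the example above.

```python
import json


def yaml_single_quoted(text: str) -> str:
    """Wrap text in a YAML single-quoted scalar (a literal ' is escaped as '')."""
    return "'" + text.replace("'", "''") + "'"


def reg_query_params(hive: str, key: str) -> str:
    """Build the JSON params string for Apollo's reg_query, as in the example above."""
    # json.dumps turns each backslash in the key into the two-character
    # escape \\ that the JSON parser expects.
    return json.dumps({"hive": hive, "key": key})


key = r"SYSTEM\CurrentControlSet\Services\Netlogon\Parameters"
print("params: " + yaml_single_quoted(reg_query_params("HKLM", key)))
```

Running it prints the same params line as the reg_query example above.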

Executing BOFs through execute_coff

To execute a BOF through the execute_coff command, we pass the COFF name and entry function in params, with the BOF’s own arguments in the coff_arguments field:

```yaml
command_name: execute_coff
params: '{
  "coff_name": "get_password_policy.x64.o",
  "function_name": "go",
  "coff_arguments": [["wchar", "localhost"]]
  }'
```

For the coff_arguments, you can generally find what the BOF accepts by reading its entry.c source file. For example, the constant here is a “wide character,” which is passed as a wide string (wchar) within Mythic:
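The same generate-don’t-hand-type approach works for execute_coff, where each entry in coff_arguments is a [type, value] pair. A minimal sketch; execute_coff_params is hypothetical, and the set of accepted type names is an assumption based on Apollo’s execute_coff as I understand it (only wchar appears in this post), so confirm it against your agent version.

```python
import json

# ASSUMPTION: argument type names accepted by Apollo's execute_coff.
# Only "wchar" appears in this post; verify the list for your agent version.
ASSUMED_COFF_TYPES = {"int16", "int32", "string", "wchar", "base64"}


def execute_coff_params(coff_name, arguments, function_name="go"):
    """Build the execute_coff params JSON with [type, value] argument pairs."""
    pairs = []
    for arg_type, value in arguments:
        if arg_type not in ASSUMED_COFF_TYPES:
            raise ValueError(f"unknown coff argument type: {arg_type!r}")
        pairs.append([arg_type, value])
    return json.dumps({
        "coff_name": coff_name,
        "function_name": function_name,
        "coff_arguments": pairs,
    })


print(execute_coff_params("get_password_policy.x64.o", [("wchar", "localhost")]))
```

Running it prints the JSON from the execute_coff example above on a single line, ready to wrap in single quotes as the params value.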

Parameter Type Mapping

Several data types can be passed to BOFs; here is a table of their definitions and use cases:

Display Arguments

Once Mythic parses the arguments from the eventing script, you should see cleaner-looking commands in the display. If something was wrong with how you passed them, the error appears there as well. To view all of the commands and parameters that were passed, hover over the command pane, locate the gear icon, and click “View All Parameters and Timestamps”. This is especially helpful when creating new eventing scripts, as you can copy the parameters from there.
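Parameters copied from “View All Parameters and Timestamps” may span several lines. A minimal sketch that collapses copied JSON into a single-line params entry for an eventing step; it assumes the copied text is a JSON object, and to_params_line is a hypothetical helper.

```python
import json


def to_params_line(copied: str) -> str:
    """Collapse copied JSON parameters into one single-quoted YAML params line."""
    data = json.loads(copied)  # fails fast if the copied text is not valid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object of parameters")
    compact = json.dumps(data)  # one line; backslashes stay JSON-escaped
    # YAML single-quoted scalars escape a literal ' by doubling it.
    return "params: '" + compact.replace("'", "''") + "'"
```

Because the output is re-serialized by json.dumps, registry paths keep their escaped backslashes, so the line can be pasted into a step as-is.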

Lessons Learned and Future Work

- Building red team infrastructure is way more complex than just using it operationally. A ton of work goes into making tools both stealthy and stable.

- Developing these automation scripts definitely gave me more appreciation for the engineering side of offensive security.

- Collaboration with the community and peers is essential. Without it, this project would not have been possible.

- Automation magnifies both successes and failures. If one thing goes wrong during script or BOF execution, the rest of the workflow can break with it.

I encourage people to contribute to the Pantheon repository so that more eventing scripts are available to everyone. It is also a good way to get familiar with Mythic C2, as you will need to work through multiple areas of the framework.

Conclusion

I wanted to give back to the community that has inspired and taught me everything I know by creating this documentation and resource. My hope for this project is that the community will continue to build up a solid collection of Mythic automation scripts for everyone to use in engagements, training, and labs.

Contact Information:

LinkedIn – https://www.linkedin.com/in/gavin-kramer

Social Media – @atomiczsec