ShareHound: An OpenGraph Collector for Network Shares

TL;DR: ShareHound is an OpenGraph collector for BloodHound CE and BloodHound Enterprise that maps network shares, permissions, and paths at scale, helping to identify attack paths to network shares automatically.

Introduction

In many enterprise environments, network shares can become prime staging targets for ransomware or lateral movement. Attackers who compromise even a low-privileged user may discover a writable or readable share that exposes sensitive data or opens up additional escalation opportunities. To make matters worse, administrators rarely have a centralized, consolidated view of share-level access across all hosts in the domain. We built ShareHound (https://github.com/p0dalirius/sharehound) to fill exactly that gap. By crawling all network shares (with throttling, connection reuse, and a domain-specific filtering language), ShareHound builds an OpenGraph map of network share exposure in a domain.

A First Approach to This Problem

Creating a collector for all the files, folders, and permissions of the network shares in a domain may initially seem like an easy task. The first, naïve idea is to enumerate all the computers in the domain and then crawl all their shares. This requires:

- An LDAP query to the domain to get all the computer objects and their dNSHostName attribute

- A DNS query to resolve each of the found computers

- Connecting to each reachable host to list its shares

- Connecting to each individual share and crawling it with a breadth-first search (BFS)
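The naive, sequential pipeline described in the list can be sketched in Python as follows. This is a minimal, hypothetical sketch, not ShareHound's code: the LDAP results are passed in as a list of dNSHostName values, and DNS resolution, share enumeration, and directory listing are injected as callables (resolve, list_shares, open_share) so that only the control flow remains. Each directory listing is assumed to return (name, is_directory) pairs.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List, Optional, Tuple

# A directory listing returns (name, is_directory) pairs for one folder path.
ListDir = Callable[[str], List[Tuple[str, bool]]]


def crawl_share_bfs(list_dir: ListDir) -> List[str]:
    """Breadth-first crawl of one share, returning every path found."""
    found: List[str] = []
    pending = deque([""])  # "" stands for the share root
    while pending:
        folder = pending.popleft()
        for name, is_dir in list_dir(folder):
            path = f"{folder}/{name}" if folder else name
            found.append(path)
            if is_dir:
                pending.append(path)  # explore subfolders level by level
    return found


def naive_collect(
    hostnames: Iterable[str],                   # dNSHostName values from the LDAP query
    resolve: Callable[[str], Optional[str]],    # hypothetical DNS lookup, None if unresolved
    list_shares: Callable[[str], List[str]],    # hypothetical share enumeration per host
    open_share: Callable[[str, str], ListDir],  # hypothetical: returns a lister for one share
) -> Dict[Tuple[str, str], List[str]]:
    """Sequentially resolve each host, list its shares, and crawl them one by one."""
    results: Dict[Tuple[str, str], List[str]] = {}
    for hostname in hostnames:
        address = resolve(hostname)
        if address is None:
            continue  # unresolvable host: nothing to crawl
        for share in list_shares(address):
            results[(hostname, share)] = crawl_share_bfs(open_share(address, share))
    return results
```

Every step here waits for the previous one to finish, which is exactly the idle time that multithreading removes.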

Performing all these actions sequentially, without multithreading, is an obvious waste of time, so let's add multithreading first!

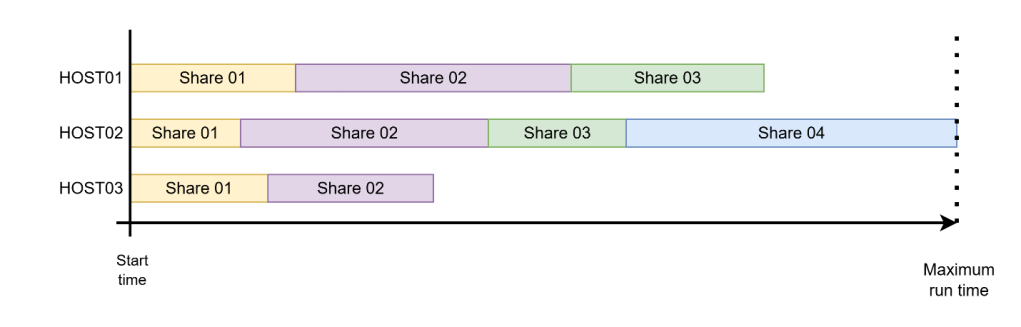

Adding Multithreading

We can add multithreading to the collector to enable parallelism and lower the overall runtime. Instead of crawling one share at a time in a serial fashion, threads allow us to keep both the client and the network busier and reduce idle time spent waiting on I/O. There are two main strategies for introducing multithreading in this context. The first is to dedicate one thread per host, meaning that all shares on a given machine are processed sequentially inside a single worker. This model is simple to implement and avoids excessive contention against a single server, but it underutilizes available parallelism when a host exposes multiple shares.

The second strategy is to dedicate one thread per share, where every share discovered across all machines becomes an independent task in the thread pool. This maximizes concurrency and tends to yield faster overall execution, because shares are usually crawled independently and spend much of their time waiting on network responses. However, it requires a safeguard: a per-host concurrency limit that prevents overwhelming individual servers. This limit is especially important for hosts that expose hundreds of shares.
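A one-task-per-share pool with a per-host limit could look like the following sketch. The crawl callable and the max_workers and per_host_limit parameters are hypothetical, and their default values are placeholders rather than ShareHound's settings. One threading.BoundedSemaphore per host caps how many of that host's shares are crawled at the same time.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def crawl_all_shares(shares, crawl, max_workers=32, per_host_limit=4):
    """Crawl every (host, share) pair as its own task, with a per-host concurrency cap.

    `crawl(host, share)` is a hypothetical function that crawls a single share.
    """
    shares = list(shares)
    # One bounded semaphore per host, created up front so no locking is needed later.
    host_limits = {host: threading.BoundedSemaphore(per_host_limit) for host, _ in shares}

    def task(host, share):
        with host_limits[host]:  # blocks while per_host_limit crawls already target this host
            return crawl(host, share)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {(host, share): pool.submit(task, host, share) for host, share in shares}
        return {key: future.result() for key, future in futures.items()}
```

One trade-off of this simple design: a worker waiting on a busy host's semaphore sits idle instead of picking up a share on another host, so a more elaborate scheduler might interleave tasks across hosts.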

In ShareHound, we treat shares as the unit of work for parallelism, with per-host throttling and connection pooling. With these optimizations, we are ready to tackle our next problem: managing the scaling of ShareHound in vast environments.
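Connection reuse can be sketched as a small per-host pool of idle connections. The HostConnectionPool class and its connect factory are hypothetical illustrations, not ShareHound's API; any object with an optional close() method can stand in for an SMB connection. This pool only caps how many idle connections are kept per host; how many crawls run concurrently against a host is governed separately by the per-host throttling described above.

```python
import queue
import threading
from contextlib import contextmanager


class HostConnectionPool:
    """Keeps up to `max_per_host` idle connections per host so later tasks can reuse them."""

    def __init__(self, connect, max_per_host=4):
        self._connect = connect  # hypothetical factory: host -> new connection
        self._max_per_host = max_per_host
        self._idle = {}
        self._lock = threading.Lock()

    def _idle_queue(self, host):
        with self._lock:  # create each host's queue exactly once, even across threads
            if host not in self._idle:
                self._idle[host] = queue.LifoQueue(maxsize=self._max_per_host)
            return self._idle[host]

    @staticmethod
    def _close(conn):
        close = getattr(conn, "close", None)
        if callable(close):
            close()

    @contextmanager
    def connection(self, host):
        idle = self._idle_queue(host)
        try:
            conn = idle.get_nowait()  # reuse the most recently returned connection
        except queue.Empty:
            conn = self._connect(host)  # none idle: open a new one
        try:
            yield conn
        except BaseException:
            self._close(conn)  # the connection may be broken: do not return it to the pool
            raise
        else:
            try:
                idle.put_nowait(conn)  # hand it back for the next task on this host
            except queue.Full:
                self._close(conn)  # pool already full: drop the extra connection
```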

Further Improvements

Even with efficient parallelism, crawling so many network shares at once is still limited by how precisely we can filter what gets explored. The most basic check a share crawling tool can implement is a depth parameter, which limits the maximum exploration depth in either depth-first search (DFS) or BFS mode. Further optimization requires a way to allow or deny exploration on a case-by-case basis.

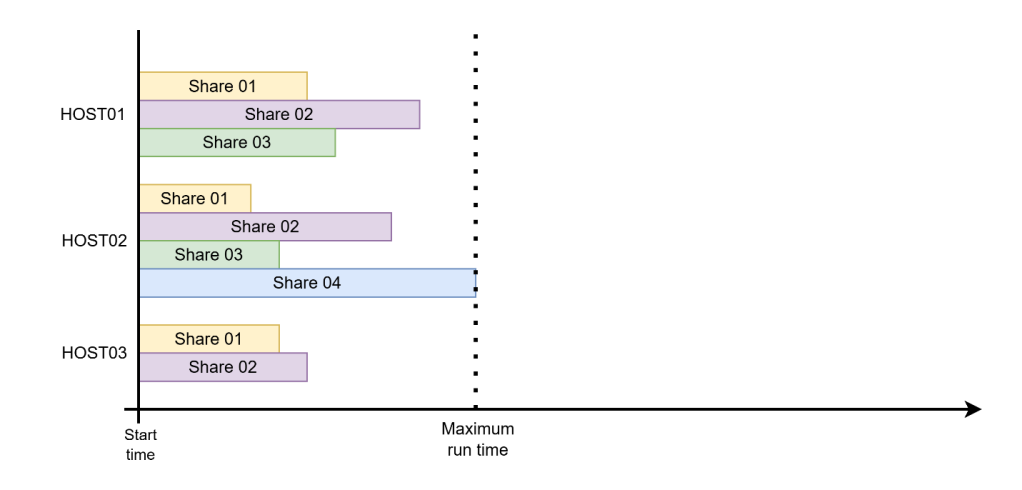
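A depth-limited crawler that supports both traversal orders might look like the sketch below. Assumptions: list_dir is a hypothetical callable returning (name, is_directory) pairs, and entries in the share root count as depth 0; ShareHound's exact depth convention may differ. The only difference between BFS and DFS here is whether the next folder comes from the front (queue) or the back (stack) of the frontier.

```python
from collections import deque
from typing import Callable, List, Tuple


def crawl_with_depth_limit(
    list_dir: Callable[[str], List[Tuple[str, bool]]],
    max_depth: int,
    mode: str = "bfs",
) -> List[Tuple[int, str]]:
    """Return (depth, path) for every entry at depth <= max_depth; root entries are depth 0."""
    if mode not in ("bfs", "dfs"):
        raise ValueError("mode must be 'bfs' or 'dfs'")
    frontier = deque([("", 0)])  # (folder path, depth of the entries it contains)
    found: List[Tuple[int, str]] = []
    while frontier:
        # BFS takes the oldest folder (queue); DFS takes the newest one (stack).
        folder, depth = frontier.popleft() if mode == "bfs" else frontier.pop()
        for name, is_dir in list_dir(folder):
            path = f"{folder}/{name}" if folder else name
            found.append((depth, path))
            if is_dir and depth < max_depth:  # only descend while under the limit
                frontier.append((path, depth + 1))
    return found
```

The depth limit is a global cutoff: it cannot say "go deeper in this share but not in that one", which is what motivates a rule language.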

Creating a Domain Specific Language

To address a wide range of possible use cases, we need a language of sorts dedicated to network shares. This is the goal of the ShareQL domain-specific language (https://github.com/p0dalirius/shareql), which we released alongside ShareHound. The language works much like a firewall ruleset: rules are evaluated in order, starting with the first one, and evaluation stops as soon as a rule matches.
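The first-match semantics can be illustrated in a few lines of Python. This does not use ShareQL syntax and is not its implementation: rules are modeled here as hypothetical (action, predicate) pairs, and the fallback action applied when no rule matches is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass(frozen=True)
class Rule:
    action: str                      # hypothetical actions: "allow" or "deny"
    matches: Callable[[Dict], bool]  # predicate over the share or path being evaluated


def evaluate(rules: Iterable[Rule], context: Dict, default: str = "allow") -> str:
    """Firewall-style evaluation: the first matching rule decides, later rules are ignored."""
    for rule in rules:
        if rule.matches(context):
            return rule.action
    return default  # assumed fallback when no rule matches
```

For example, a deny rule for IPC$ placed before a catch-all allow rule skips IPC$, while swapping the two rules would crawl it, because evaluation stops at the first match.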

Here is an example of a ruleset aiming to skip known shares:

As a small bonus for end users, we also provide a syntax highlighting extension for the ShareQL language in VSCode: https://github.com/p0dalirius/shareql-vscode-ext

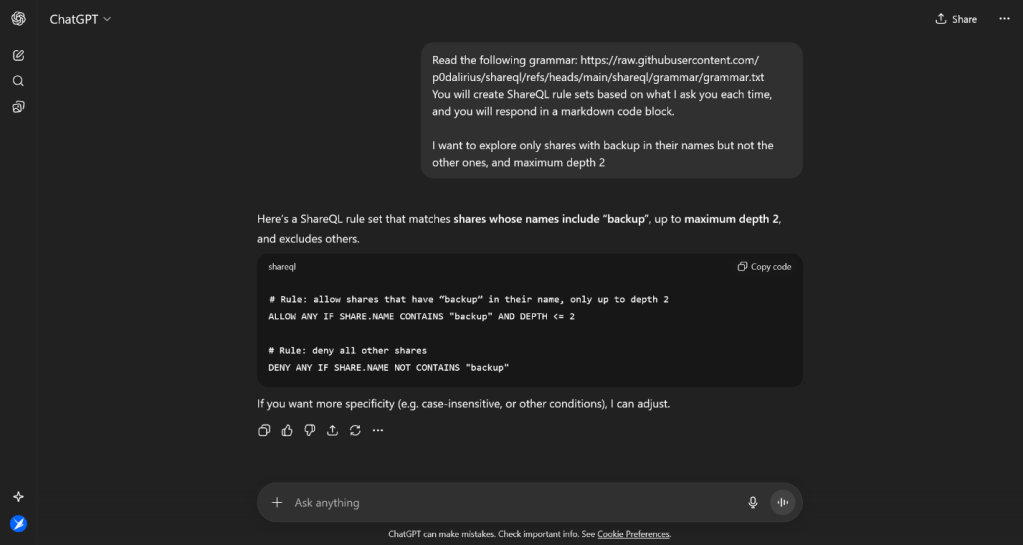

In the era of Large Language Models (LLMs), a domain-specific language like this also lets users ask an LLM, in natural language, to generate ShareQL rules for their use cases!

Here is an example prompt for this:

Read the following grammar: https://raw.githubusercontent.com/p0dalirius/shareql/refs/heads/main/shareql/grammar/grammar.txt. You will create ShareQL rule sets based on what I ask you each time, and you will respond in a markdown code block with only the ShareQL rule set.

—

I want to explore only shares with backup in their names but not the other ones, and maximum depth 2

Use Cases of ShareHound

The most common use case for ShareHound is finding the network shares that ransomware would target: shares on which low-privileged users have Write or Full Control permissions.

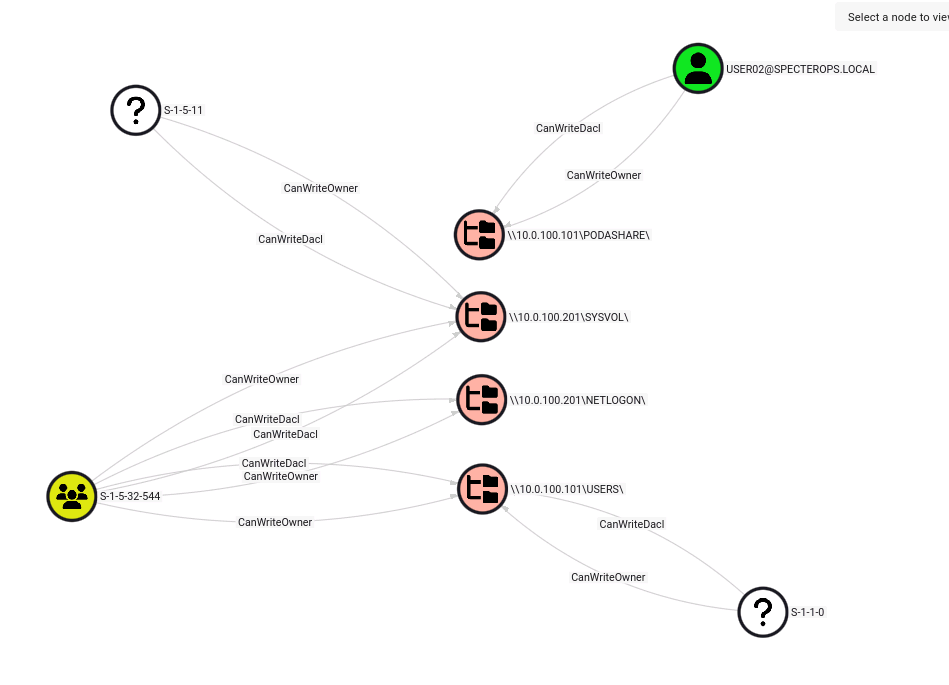

Finding Principals with Write Access to a Network Share

To find principals with Write access to a network share, we need to look for the WRITE_DAC, WRITE_OWNER, DS_WRITE_PROPERTY, and DS_WRITE_EXTENDED_PROPERTY rights. We can write this simple Cypher query to find them, using the CanWriteDacl, CanWriteOwner, CanDsWriteProperty, and CanDsWriteExtendedProperties edges:

MATCH x=(p)-[r:CanWriteDacl|CanWriteOwner|CanDsWriteProperty|CanDsWriteExtendedProperties]->(s:NetworkShareSMB) RETURN x

Running this query will result in this type of graph:

Here, we can see that my user has Write access on the PODASHARE share.

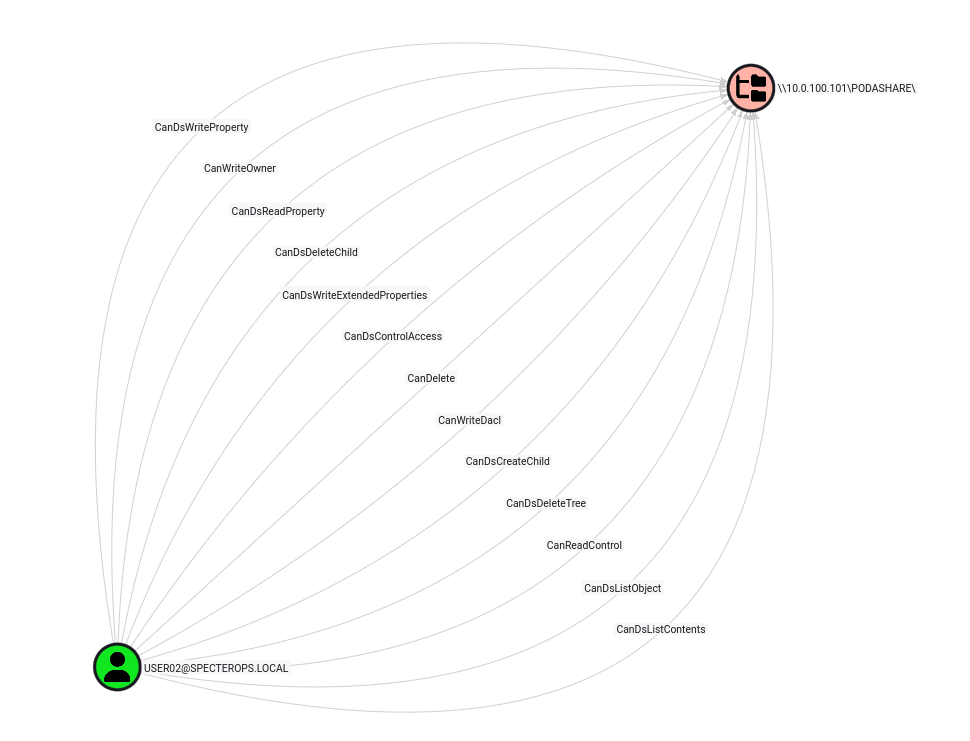
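If you want to run the same check on the collected JSON file before ingesting it, a sketch like the following could work. It assumes an OpenGraph layout with a top-level graph object holding nodes (with id and kinds) and edges (with kind, start.value, and end.value); verify this against the file your ShareHound version produces. The function names are hypothetical.

```python
import json
from typing import Dict, Set

# Edge kinds used by the Write access Cypher query above.
WRITE_KINDS = {"CanWriteDacl", "CanWriteOwner", "CanDsWriteProperty", "CanDsWriteExtendedProperties"}


def load_opengraph(path: str) -> dict:
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def write_principals(opengraph: dict) -> Dict[str, Set[str]]:
    """Map each NetworkShareSMB node id to the principal ids holding a write-type edge to it."""
    graph = opengraph["graph"]
    shares = {node["id"] for node in graph["nodes"] if "NetworkShareSMB" in node.get("kinds", [])}
    result: Dict[str, Set[str]] = {}
    for edge in graph["edges"]:
        share = edge["end"]["value"]
        if edge["kind"] in WRITE_KINDS and share in shares:
            result.setdefault(share, set()).add(edge["start"]["value"])
    return result
```

Usage would be write_principals(load_opengraph(path)), with path pointing at the JSON file ShareHound wrote.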

Finding Principals with Full Control on Network Shares

Full Control is a set of rights containing all rights except the Generic rights. Therefore, we can create a Cypher query to find principals having FULL_CONTROL on network shares like this:

MATCH (p:Principal)-[r]->(s:NetworkShareSMB) WHERE (p)-[:CanDelete]->(s) AND (p)-[:CanDsControlAccess]->(s) AND (p)-[:CanDsCreateChild]->(s) AND (p)-[:CanDsDeleteChild]->(s) AND (p)-[:CanDsDeleteTree]->(s) AND (p)-[:CanDsListContents]->(s) AND (p)-[:CanDsListObject]->(s) AND (p)-[:CanDsReadProperty]->(s) AND (p)-[:CanDsWriteExtendedProperties]->(s) AND (p)-[:CanDsWriteProperty]->(s) AND (p)-[:CanReadControl]->(s) AND (p)-[:CanWriteDacl]->(s) AND (p)-[:CanWriteOwner]->(s) RETURN p,r,s

As a result, we get this onion-looking graph on PODASHARE, where my user has FULL_CONTROL rights:

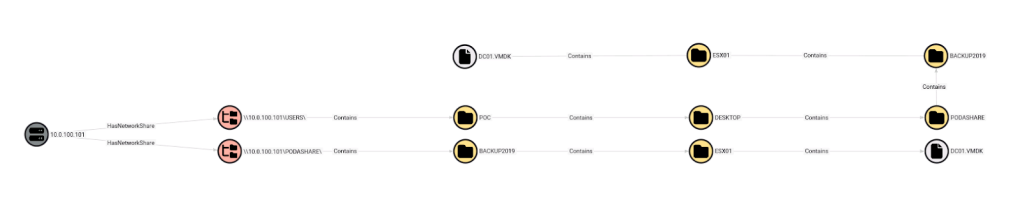
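The Full Control check is a set-inclusion test: a (principal, share) pair qualifies only if it holds every edge kind listed in the query. A minimal sketch over the same assumed OpenGraph JSON layout (top-level graph with nodes and edges); unlike the query, it does not check that the source node carries a Principal kind.

```python
from collections import defaultdict
from typing import Set, Tuple

# The thirteen edge kinds required by the Full Control Cypher query above.
FULL_CONTROL_KINDS = frozenset({
    "CanDelete", "CanDsControlAccess", "CanDsCreateChild", "CanDsDeleteChild",
    "CanDsDeleteTree", "CanDsListContents", "CanDsListObject", "CanDsReadProperty",
    "CanDsWriteExtendedProperties", "CanDsWriteProperty", "CanReadControl",
    "CanWriteDacl", "CanWriteOwner",
})


def full_control_pairs(opengraph: dict) -> Set[Tuple[str, str]]:
    """Return (principal id, share id) pairs holding every Full Control edge kind."""
    graph = opengraph["graph"]
    shares = {n["id"] for n in graph["nodes"] if "NetworkShareSMB" in n.get("kinds", [])}
    held = defaultdict(set)  # (principal, share) -> edge kinds seen between them
    for edge in graph["edges"]:
        if edge["end"]["value"] in shares:
            held[(edge["start"]["value"], edge["end"]["value"])].add(edge["kind"])
    return {pair for pair, kinds in held.items() if FULL_CONTROL_KINDS <= kinds}
```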

Finding a VMDK File of a Domain Controller in a Share with Read Access

One very interesting use case where ShareHound can help your assessments is finding virtual disks stored in network shares that an owned low-privileged user can access. A few years ago, I performed an assessment against a hardened domain with very strong network tiering in place. At some point, I started digging into shares to look for juicy secrets, and I found a forgotten share that all Authenticated Users could access and that contained an old VMDK of a secondary domain controller (DC). In this case, ShareHound would have found it for me automatically.

With ShareHound, you can find such files by filtering on the file extension:

MATCH p=(h:NetworkShareHost)-[:HasNetworkShare]->(s:NetworkShareSMB)-[:Contains*0..]->(f:File) WHERE toLower(f.extension) = toLower(".vmdk") RETURN p
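An offline equivalent of this query walks HasNetworkShare and Contains edges breadth-first from each share. This sketch assumes the same OpenGraph JSON layout (nodes with id, kinds, and properties; edges with kind, start.value, and end.value) and that file nodes carry an extension property, as the query implies. Unlike the query, it relies on edge kinds rather than checking the host and share node kinds.

```python
from collections import defaultdict, deque
from typing import Dict, List, Tuple


def find_files_by_extension(opengraph: dict, extension: str = ".vmdk") -> List[Tuple[str, str, str]]:
    """Return (host id, share id, file id) for File nodes reachable from a share via Contains edges."""
    graph = opengraph["graph"]
    nodes: Dict[str, dict] = {node["id"]: node for node in graph["nodes"]}
    children = defaultdict(list)  # node id -> ids it Contains
    host_shares = []              # (host id, share id) from HasNetworkShare edges
    for edge in graph["edges"]:
        src, dst = edge["start"]["value"], edge["end"]["value"]
        if edge["kind"] == "Contains":
            children[src].append(dst)
        elif edge["kind"] == "HasNetworkShare":
            host_shares.append((src, dst))

    hits = []
    for host, share in host_shares:
        seen = {share}
        pending = deque([share])  # breadth-first walk, like Contains*0.. in the query
        while pending:
            current = pending.popleft()
            node = nodes.get(current, {})
            ext = str(node.get("properties", {}).get("extension", ""))
            if "File" in node.get("kinds", []) and ext.lower() == extension.lower():
                hits.append((host, share, current))
            for child in children[current]:
                if child not in seen:
                    seen.add(child)
                    pending.append(child)
    return hits
```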

In BloodHound, running the Cypher query above gives us the following graph:

Demonstration of ShareHound

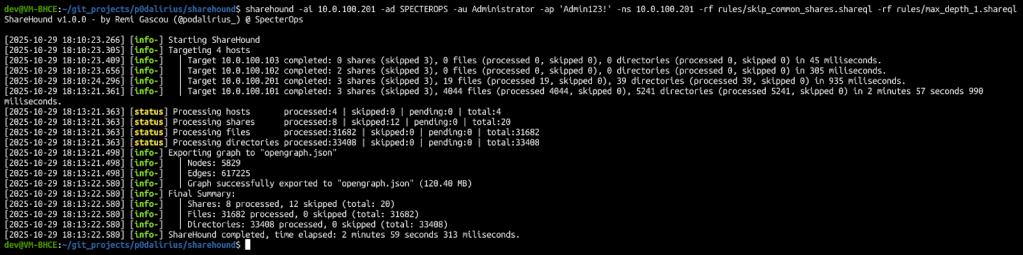

Using ShareHound is very easy: you just need to specify the credentials with -ad/-au/-ap and the rules with the -r/-rf arguments.

sharehound -ai "10.0.100.201" -au "user" -ap "Test123!" -ns "10.0.100.201" -rf "rules/skip_common_shares.shareql" -rf "rules/max_depth_2.shareql"

After this, you will end up with a JSON file containing the OpenGraph data to ingest into BloodHound.
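Before ingesting the file, a quick sanity check is to count nodes and edges by kind. A minimal sketch, again assuming an OpenGraph layout with a top-level graph object containing nodes (each with a kinds list) and edges (each with a kind); the function name is hypothetical.

```python
import json
from collections import Counter
from typing import Tuple


def summarize_opengraph(path: str) -> Tuple[Counter, Counter]:
    """Count node kinds and edge kinds in an OpenGraph JSON file."""
    with open(path, encoding="utf-8") as handle:
        graph = json.load(handle)["graph"]
    node_kinds = Counter(kind for node in graph["nodes"] for kind in node.get("kinds", []))
    edge_kinds = Counter(edge["kind"] for edge in graph["edges"])
    return node_kinds, edge_kinds
```

An unexpectedly low File count, for example, can hint that rules or the depth limit pruned more than intended.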

Wrapping Up

Mapping network shares is hard. With the ShareQL language, ShareHound provides a good base for users to customize their searches in network shares and build custom rulesets for specific scenarios.

Finally, keep in mind that even with these optimizations, crawling every share of an enterprise domain to its full depth will still take a very long time. For time-sensitive assessments, we recommend limiting the maximum exploration depth to 1 or 2 unless you are filtering on specific shares to crawl.
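A back-of-the-envelope calculation shows why depth matters so much. Assuming a hypothetical, uniform share where every folder holds b entries that are all folders (a worst case), a crawl limited to depth d lists b + b^2 + ... + b^(d+1) entries per share, counting the root listing as depth 0:

```python
def entries_up_to_depth(branching: int, max_depth: int) -> int:
    """Worst-case entries listed in one share when every folder holds `branching` subfolders.

    The root listing is depth 0 (branching entries), depth 1 adds branching**2, and so on.
    """
    return sum(branching ** (level + 1) for level in range(max_depth + 1))
```

With b = 20, for example, depth 2 means 8,420 entries per share, while depth 5 already means 67,368,420.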