Oct 5 2022

Prioritization of the Detection Engineering Backlog

Written by Joshua Prager and Emily Leidy

Introduction

Strategically maturing a detection engineering function requires us to divide the overall function into smaller discrete problems. One such seemingly innocuous area of detection engineering is the technique backlog (a.k.a. the detection engineering backlog, attack technique backlog, or detection backlog).

Incorporating a backlog into the detection engineering function as a medium for receiving and storing attack techniques for detection generation is not novel for most organizations. However, very few security organizations consider how best to prioritize the attack techniques within this backlog. By combining input-based prioritization with the Center for Threat Informed Defense’s Top Ten Technique Calculator, detection engineers can confidently select target techniques with a sense of direction.

The Importance of the Detection Engineering Backlog

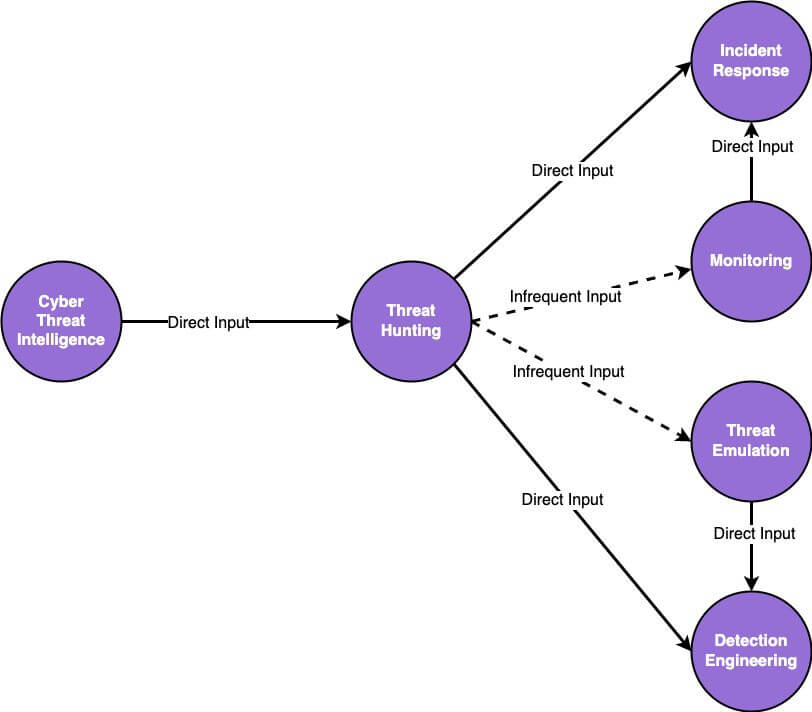

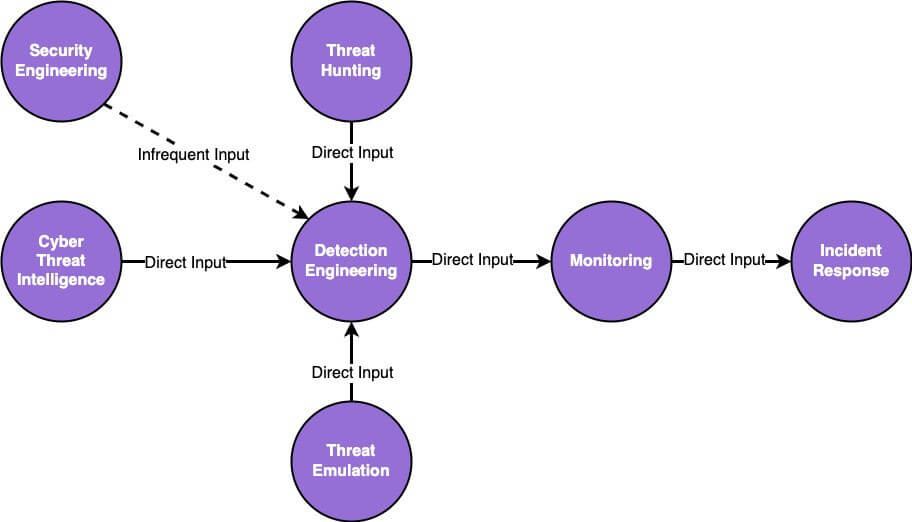

The detection engineering backlog is the starting point from which a mature detection engineering function should begin. This backlog is essentially an input chokepoint through which other functions within a security organization’s detection and response program provide techniques for detection generation. These inputs may come from functions whose research and output detection engineering consumes as a stakeholder. Cyber threat intelligence (CTI) is one example of such a function. Another function that can act as an input into the detection engineering backlog is threat hunting, which can provide hypotheses, research, and queries to the backlog, servicing a critical need for cross-functional collaboration.

Most functions within a security organization’s detection and response program may leverage backlogs within their development processes. However, many of these functions do not require input to drive their functional operation. The detection engineering function, specifically, requires the direction of cross-functional input to avoid making ad-hoc decisions about resource utilization. In other words, detection engineering must be steered by the detection and response program, or resources could be devoted in the wrong direction at the wrong time.

When describing the differences between the threat hunting function and the detection engineering function, we often highlight the expected operation of each function as a mature process. The threat hunting function requires very little input from any other function. Most threat hunting functions will be a stakeholder to CTI, at a minimum. However, the concept of proactive hunting assumes that no external stimuli are needed to develop a hypothesis, conduct research, and develop proactive hunting queries.

In contrast, the detection engineering function requires the external stimuli of other detection and response inputs to accurately prioritize detection generation. Inputs into the detection engineering backlog can be of multiple types such as a gap in a defensive posture, a historical look-back query from threat hunting, or a research-centric goal of generating detections along a specific technique type. Regardless of the input type, the detection engineering function requires the inputs of other functions to know which detections to generate.

Input Examples for the Backlog

Consulting for SpecterOps’ clients has afforded our detection services team exposure to a wide array of strategic methods for capturing the required input for detection engineering. For each of our clients, SpecterOps avoids emphasizing a particular tool or solution for providing input opportunities to the detection and response program. Instead, we provide the minimum criteria that mature detection engineering functions need to receive quality inputs, and we define the methodology by which to organize and prioritize this backlog.

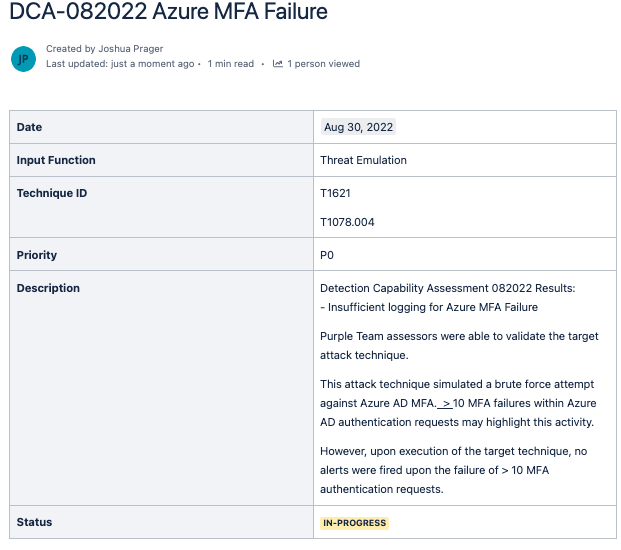

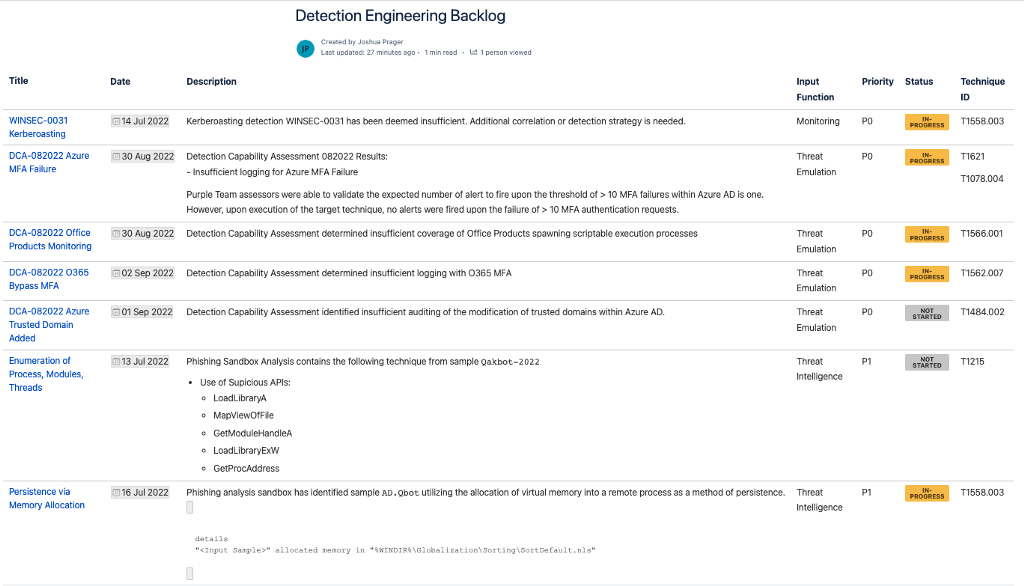

Most of our clients utilize a ticketing platform of some kind to offer a portal through which the other functions of detection and response interact with detection engineering. These ticketing platforms, regardless of the actual software, should ask for the same details from everyone who provides input. The minimum requirements for using a ticketing system as an input into the detection backlog are as follows:

- Method of tagging the function that inputs the request (we will explain why this is important in a bit).

- An area where a detailed description of the technique can be described with relative links such as MITRE ATT&CK Technique IDs.

- Method of tracking the status of the ticket requested in the backlog.
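As a rough illustration, the three minimum requirements above could be modeled as a small ticket record. This is only a sketch: the class, field, and status names are hypothetical and do not come from any particular ticketing platform.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    """Lifecycle states for a backlog request (hypothetical names)."""
    NEW = "new"
    IN_RESEARCH = "in_research"
    DETECTION_BUILT = "detection_built"
    CLOSED = "closed"


@dataclass
class DetectionRequest:
    """One ticket in the detection engineering backlog."""
    requesting_function: str  # tag for the function that submitted the input
    description: str          # detailed description of the technique
    attack_technique_ids: List[str] = field(default_factory=list)  # e.g. MITRE ATT&CK IDs
    reference_links: List[str] = field(default_factory=list)       # blogs, POCs, and similar
    status: Status = Status.NEW                                    # tracked through the backlog

    def missing_fields(self) -> List[str]:
        """Return the minimum-requirement fields that are still empty."""
        missing = []
        if not self.requesting_function.strip():
            missing.append("requesting_function")
        if not self.description.strip():
            missing.append("description")
        if not self.attack_technique_ids and not self.reference_links:
            missing.append("attack_technique_ids or reference_links")
        return missing


request = DetectionRequest(
    requesting_function="threat_hunting",
    description="Kerberoasting via service ticket requests for SPN-bearing accounts.",
    attack_technique_ids=["T1558.003"],
)
# An empty list means the ticket meets the minimum criteria.
print(request.missing_fields())
```

A check like `missing_fields` could run when a ticket is submitted, so that requests arriving without a source tag or technique reference are sent back before they reach the backlog.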

Most of the above criteria are common-sense requirements for any ticketing platform; however, quite a few organizations rely upon methods such as email or chat platforms as an official method of requesting new detections. When the organizations we consult tell us, “Direct messages or email is the approved method of requesting the generation of a detection,” this generally raises concerns for us in the following two areas:

1. The detection engineers are only generating the one detection requested and not discovering additional related techniques or methods of execution in their research.

   - This is often due to a lack of research during the detection generation.

2. The detection engineering backlog does not exist or it is utilized ad-hoc.

   - This can be due to a lack of input or an input that is not operational/useful for detection engineering.

Ideally, we want a large list of techniques and methods of execution within the backlog from which to develop detections. Additionally, you may have noticed that we did not include the ability to attach documents or reports in the list of minimum requirements. This omission ties into the problem of input from other functions being non-operational. Many CTI functions will provide input to the detection engineering backlog in the form of an intelligence report or a spreadsheet of Indicators of Compromise (IOCs). Mature cross-functional communication between CTI and detection engineering should instead include the metadata needed to accomplish the goal of generating a detection. For example, CTI can provide a list of all known methods of Kerberoasting via links to blogs and open-source proofs of concept (POCs), instead of an attached intelligence report PDF of 15 high-level explanations of Tactics, Techniques, and Procedures (TTPs). The former provides useful information that detection engineers can use to gauge the completeness of technique coverage; the latter provides very little actionable information for a detection engineer.

- Note: If the CTI function provides reports that include information for a very specific method of execution found within an intelligence report, then the CTI function must specify the exact location within the intelligence report that contains the vital information.

How to Prioritize the Detection Engineering Backlog

Detection engineering teams that have cross-functional communication providing inputs into their detection backlog generally select target techniques to research sequentially. This method sometimes assumes that the backlog is already prioritized; in reality, the backlog is simply ordered by creation timestamp.

We at SpecterOps have aimed to solve this problem for multiple clients, and what we have settled on is a priority based on input, with priority 0 as the highest priority and priority 4 as the lowest priority. When asked to explain this methodology to clients, we usually provide the following analogy.

Priority 0

When living within a house, or in a community, which of these is the greater concern? A stranger knocking on our shut and locked front door, or our front porch window that is open without any screen or glass protection? If you thought, “The window, because the stranger at the front door can just come through it,” then you would be correct. Though the stranger knocking at our door is an attention-grabbing concern, the front door is locked and doing what it was designed to do: prevent, secure, and detect. The open window, however, is cause for immediate concern because it is a known vulnerability or gap in our ability to prevent, secure, or detect.

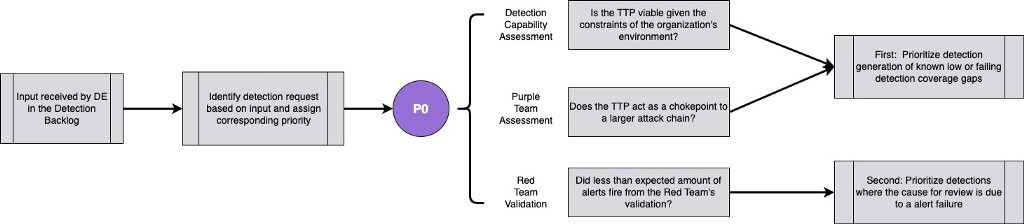

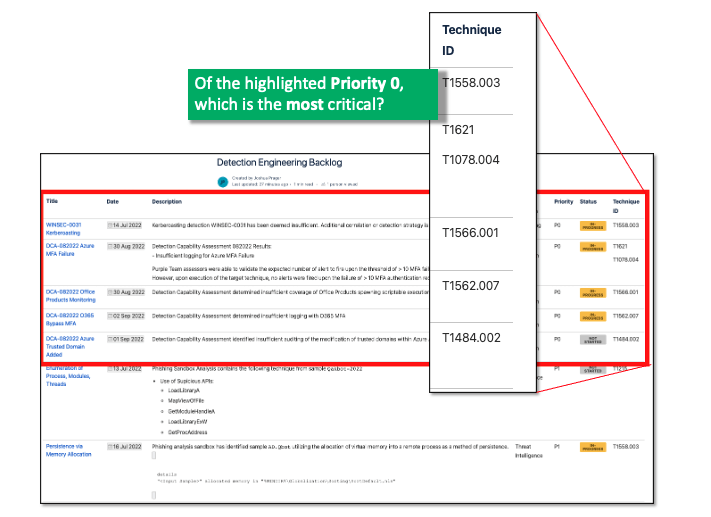

The same can be applied to detection engineering where the input comes from gap analysis, purple team assessments, and defensive capability assessments. The highest priority of generating net new detections is to focus on known target techniques for which the organization has the least amount of coverage.

- Note: For the sake of clarity, defensive capability assessments should gauge the level of completeness for a particular technique’s detection coverage. This priority structure is applied to detection engineering with the assumption that behavior-based techniques are the input to the detection engineering program. It does not consider things like new software vulnerabilities unless there is a Log4j type of scenario where the input is a software vulnerability related to multiple cyber network exploitation campaigns.

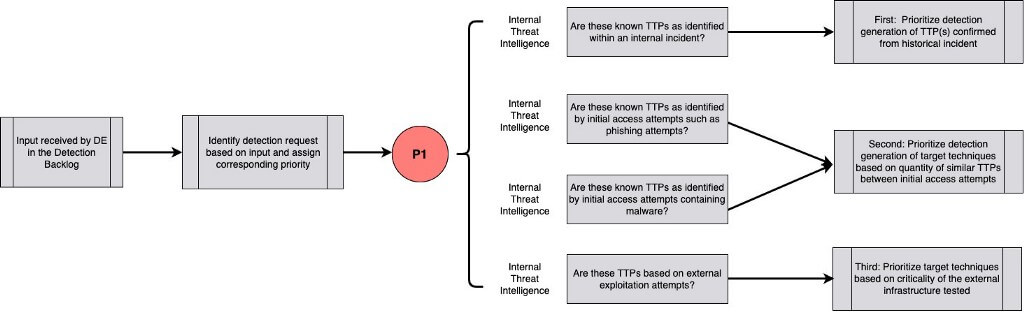

Priority 1

Following up with the house scenario, which of these two is the greater concern? A stranger knocking on the shut and locked front door or a community post about intruders knocking on your neighbors’ doors and attempting to barge in? Though the community post is definitely a frightening scenario, it doesn’t directly affect us at this time. Our immediate concern is the stranger knocking on our front door. Luckily, our front door’s lock is stopping any possible intrusion by preventing and detecting the possible intruder. This method of identifying the techniques used against our organization is a form of internal intelligence. Examples of internal intelligence are techniques derived from identified phishing attempts, incidents, and honeynets.

Internal intelligence can provide a wealth of opportunities to justify the prioritization of one group of TTPs over another. Mature organizations, for example, aim to automate this input by forwarding prevented phishing attempt samples to cloud-hosted sandboxes. Next, the samples are cataloged and the TTPs are disseminated to the detection engineering backlog via quantitative analysis of the TTPs. The detection engineers prioritize these TTPs, derived from internal intelligence on prevented phishing attempts, and design detections around the TTPs used against the organization in case prevention fails and a phishing attempt executes successfully.
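The quantitative step in that pipeline can be as simple as counting how often each technique appears across the sandboxed samples. The sketch below assumes the sandbox output has already been reduced to one list of ATT&CK technique IDs per sample; the sample data, the function name, and the `minimum_samples` threshold are hypothetical.

```python
from collections import Counter

# Hypothetical sandbox output: ATT&CK technique IDs observed per prevented phishing sample.
sandbox_results = [
    ["T1566.001", "T1204.002", "T1059.001"],
    ["T1566.001", "T1204.002", "T1547.001"],
    ["T1566.002", "T1059.001"],
]


def rank_observed_techniques(results, minimum_samples=2):
    """Count the samples that exhibited each technique and keep the recurring ones."""
    counts = Counter()
    for techniques in results:
        counts.update(set(techniques))  # count each technique once per sample
    # Most frequent first; ties are broken by technique ID for a stable order.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [(tid, n) for tid, n in ranked if n >= minimum_samples]


for technique_id, sample_count in rank_observed_techniques(sandbox_results):
    print(f"{technique_id}: seen in {sample_count} samples")
```

Techniques that clear the threshold would then be submitted to the backlog as internal intelligence inputs.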

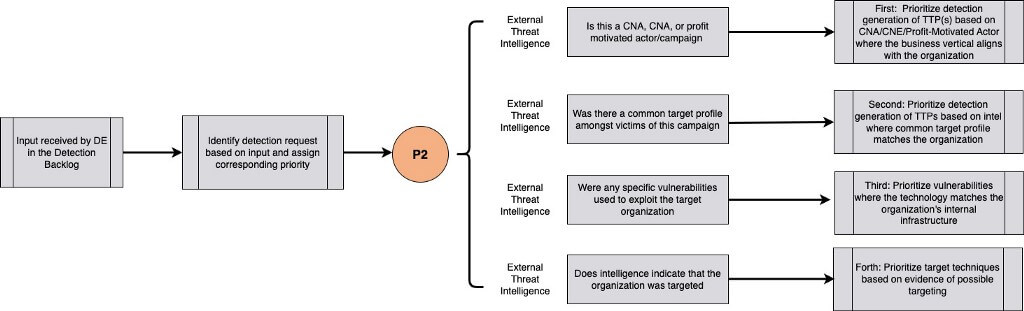

Priority 2

Continuing with our analogy of prioritization, we see the issue of the community post stating that intruders are knocking on doors and barging in. This scenario is not ideal; however, we have not yet been directly attacked in this way. As homeowners, we do have similarities with our neighbors and we should heed their warnings, but we should not prioritize this information over the current concerns at our doorstep (the open window and the stranger at our door). This part of the analogy represents external intelligence, and the techniques selected from this type of intelligence must be held to a stricter standard before acting as an input into the detection backlog.

Sharing a business vertical with another organization is not enough to filter out what does not pertain to our organization and does not belong in our backlog. Instead, TTPs from this input should match pre-defined criteria that are unique to each organization. The attributes of the organization’s threat landscape make a great starting point for filters that dismiss unusable techniques and procedures requested from external intelligence. By prioritizing the detection backlog with internal intelligence before external intelligence, detection engineering can more accurately assign resources first to threats that are actively testing the defenses.
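One way to picture such pre-defined criteria is as a filter applied before an external intelligence request is accepted into the backlog. The sketch below uses a single hypothetical criterion, the platforms the organization actually operates; real criteria would be specific to each organization, and every name and value here is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExternalTechnique:
    technique_id: str     # ATT&CK technique ID named in the external report
    platforms: frozenset  # platforms the reported procedure targets
    source: str           # where the intelligence came from


# Hypothetical attribute of our own threat landscape.
ORG_PLATFORMS = frozenset({"windows", "azure_ad"})


def relevant_to_org(technique, org_platforms=ORG_PLATFORMS):
    """Accept a technique only if it targets at least one platform we operate."""
    return bool(technique.platforms & org_platforms)


reported = [
    ExternalTechnique("T1558.003", frozenset({"windows"}), "vendor report"),
    ExternalTechnique("T1053.003", frozenset({"linux", "macos"}), "industry sharing group"),
]

accepted = [t for t in reported if relevant_to_org(t)]
print([t.technique_id for t in accepted])
```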

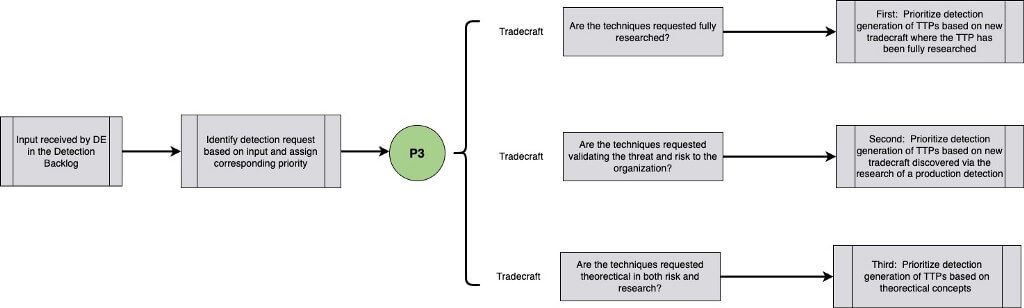

Priority 3

Let us progress a bit in our analogy. In the same community post above, there is a sub-comment where another person states, “Sometimes the intruders knock on the door, but other times they break the glass window on the front door to unlock the deadbolt.” Here, the analogy represents new tradecraft discovered while generating a detection for a similar technique. Jared Atkinson explains that by aiming abstraction at “maximizing the representation of the possible variations” we may discover procedurally unique instances that are sub-technical synonyms [1]. In this case, the methods by which the intruders gain access to the homes differ; however, they are sub-technical synonyms.

As defenders research and validate each hypothesis, they can discover procedurally unique instances of tradecraft for which controls may not yet be implemented. When these newly discovered forms of tradecraft become an input into the detection engineering backlog, they are often somewhat theoretical, and further testing and validation are needed to determine whether the new tradecraft poses a legitimate threat to the organization’s environment.

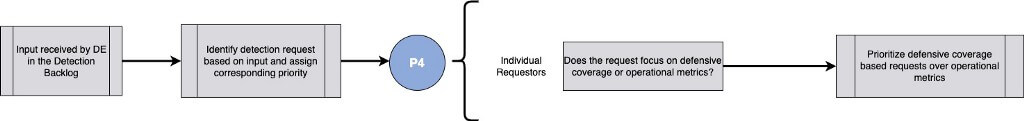

Priority 4

To finish our analogy, we receive a phone notification from our local police department that there has been a severe 5% increase in break-ins in our area of the city. This final part of the analogy represents the generation of metric-based queries and threshold-based alerts for concerns that are not threat detections.

Operational metrics and key performance indicators are desired across the purview of detection and response. Requests for this type of alert often make their way to the detection backlog because of the expertise in query development and data aggregation that most detection engineers have. These metrics are focused on situational awareness and provide very little operational impact to Defense in Depth; thus, they should be held at the lowest priority.
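The five input-based priority levels described above can be sketched as a lookup from the function tag on each ticket to a priority, followed by a sort. This is a minimal illustration, not a SpecterOps tool: the source tags, ticket IDs, and dictionary layout are hypothetical.

```python
from datetime import datetime
from enum import IntEnum


class InputPriority(IntEnum):
    KNOWN_GAP = 0        # gap analysis, purple team, defensive capability assessments
    INTERNAL_INTEL = 1   # phishing attempts, incidents, honeynets
    EXTERNAL_INTEL = 2   # external intelligence that passed the organization's filters
    NEW_TRADECRAFT = 3   # variations discovered during detection research
    METRICS = 4          # operational metrics and KPI requests


# Hypothetical source tags recorded on each ticket.
SOURCE_PRIORITY = {
    "gap_analysis": InputPriority.KNOWN_GAP,
    "purple_team": InputPriority.KNOWN_GAP,
    "capability_assessment": InputPriority.KNOWN_GAP,
    "phishing": InputPriority.INTERNAL_INTEL,
    "incident_response": InputPriority.INTERNAL_INTEL,
    "honeynet": InputPriority.INTERNAL_INTEL,
    "external_cti": InputPriority.EXTERNAL_INTEL,
    "detection_research": InputPriority.NEW_TRADECRAFT,
    "metrics_request": InputPriority.METRICS,
}


def prioritize(backlog):
    """Order tickets by input-based priority, then oldest first within a level."""
    return sorted(backlog, key=lambda t: (SOURCE_PRIORITY[t["source"]], t["created"]))


backlog = [
    {"id": "DE-101", "source": "external_cti", "created": datetime(2022, 9, 1)},
    {"id": "DE-102", "source": "metrics_request", "created": datetime(2022, 9, 2)},
    {"id": "DE-103", "source": "purple_team", "created": datetime(2022, 9, 20)},
    {"id": "DE-104", "source": "phishing", "created": datetime(2022, 9, 15)},
]

print([t["id"] for t in prioritize(backlog)])
# ['DE-103', 'DE-104', 'DE-101', 'DE-102']
```

Note that the newest ticket (DE-103) moves to the front: ordering by creation time alone would have placed it last.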

Input-Based Prioritization Flow

Below is a flow chart that SpecterOps has developed in an attempt to visualize this methodology based on input into the detection backlog. The flow chart provides questions that should enable the detection engineers to approve or disapprove additions to the backlog based on the context. This flow chart is a generalized starting point, and organizations that utilize this methodology should be prepared to operationalize this knowledge by adapting it to the unique structure of their organization.

Limitations of the Input-Based Priority Methodology

There are several important considerations when implementing this methodology into your organization.

The process of determining the prioritization is subjective and may contain overlap. For example, detection engineering may receive an external intelligence report that identifies a critical TTP to which your organization is vulnerable. In this scenario, the original input (external intelligence) would indicate priority 2, but the information contained in the report would warrant priority 0. If this pertinent information is known at the time of prioritization, always default to the higher-priority level.
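Because priority 0 is the highest level, "default to the higher-priority level" means taking the numerically lower of the two values. A minimal sketch of that rule, using a hypothetical helper name:

```python
from typing import Optional


def effective_priority(source_priority: int, content_priority: Optional[int] = None) -> int:
    """Return the higher-priority (numerically lower) of the two levels.

    source_priority:  level implied by where the input came from
    content_priority: level implied by what the input contains, if known
    """
    if content_priority is None:
        return source_priority
    return min(source_priority, content_priority)


# External intelligence (priority 2) that reveals a known gap (priority 0).
print(effective_priority(2, 0))  # 0
```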

Detection prioritization requires industry and organizational context, which aids the prioritization lead in minimizing errors. These errors could lead to unidentified and unremediated vulnerabilities sitting in the backlog, especially if the input is from a less mature function and does not contain the needed operational information. A less experienced engineer may need more resources to analyze such input and prioritize it correctly. Regardless of who is prioritizing, visibility bias should be considered: when the prioritizer has researched a particular high-priority attack, other unknown or unfamiliar critical inputs may be incorrectly demoted.

Finally, as mentioned before, non-operational input from other less mature functions may make this process difficult or time-consuming. Feedback loops should be implemented to streamline this process and reduce the amount of time spent dissecting the input.

Sub-Priority for Micro-Control

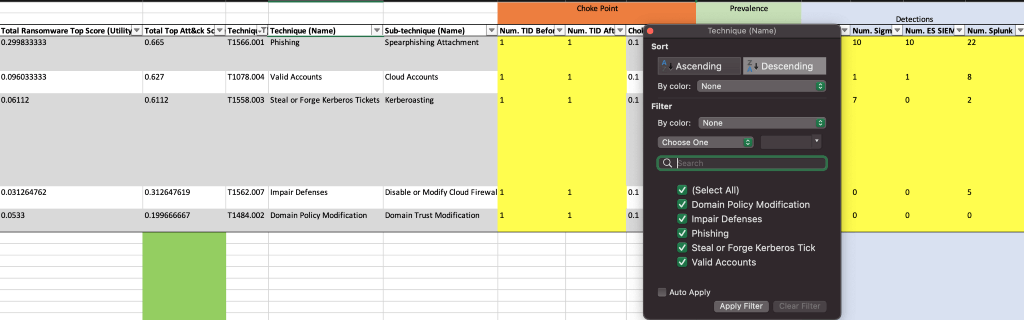

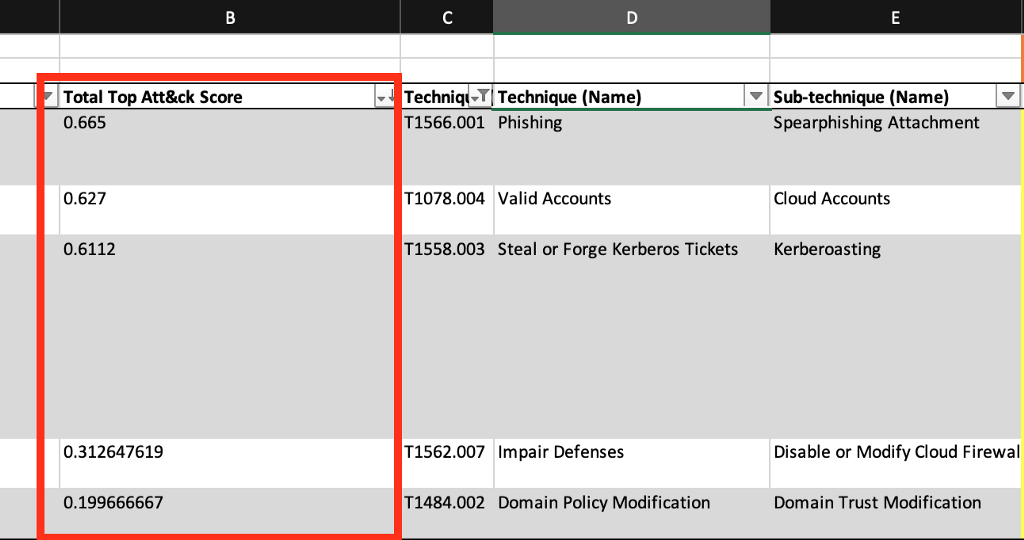

For some organizations, the above methodology is sufficient because their teams are somewhat small and their inputs into the backlog are manageable. For other organizations, it is a good starting point but may still leave teams wondering how to drill down further to gain micro-control over the prioritization structure. For that, we recommend combining the Input-Based Priority structure above with the Center for Threat Informed Defense’s (The Center) Top 10 Technique Calculator to prioritize the target techniques within each Input-Based Priority level [2].

The Top 10 Technique Calculator has a spreadsheet version found on GitHub that represents the backend of the web-based version [3]. This spreadsheet can be tuned and customized to match the techniques within the detection backlog per priority area. The user can then further customize this spreadsheet to represent a high-level example of coverage for specific data sources. Based on The Center’s methodology, the techniques selected, the coverage for data sources, and specific prevention controls, the calculator will produce a list of the top ten most critical techniques.

For example, if the detection engineering function has 15 Priority 0 techniques within the detection engineering backlog, we can use the Top 10 Calculator to rank those 15 detection requests and select the most critical for detection generation first.
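Combining the two methods amounts to a second sort inside each priority level. The sketch below assumes the calculator's scores have already been exported into a dictionary keyed by technique ID; the function name and the scores shown are placeholders, not real calculator output.

```python
def select_targets(technique_ids, calculator_scores, limit=10):
    """Rank same-priority requests by calculator score, highest first.

    Techniques without a score are treated as 0.0 and therefore sort last.
    """
    ranked = sorted(
        technique_ids,
        key=lambda tid: calculator_scores.get(tid, 0.0),
        reverse=True,
    )
    return ranked[:limit]


# Hypothetical Priority 0 requests and placeholder scores.
priority_zero = ["T1558.003", "T1003.001", "T1053.005"]
placeholder_scores = {"T1558.003": 1.2, "T1003.001": 2.4, "T1053.005": 1.9}

print(select_targets(priority_zero, placeholder_scores, limit=2))
# ['T1003.001', 'T1053.005']
```

With 15 Priority 0 requests and the default `limit=10`, the same call would return the ten highest-scoring techniques to work on first.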

Methodology Behind the Prioritization Calculator

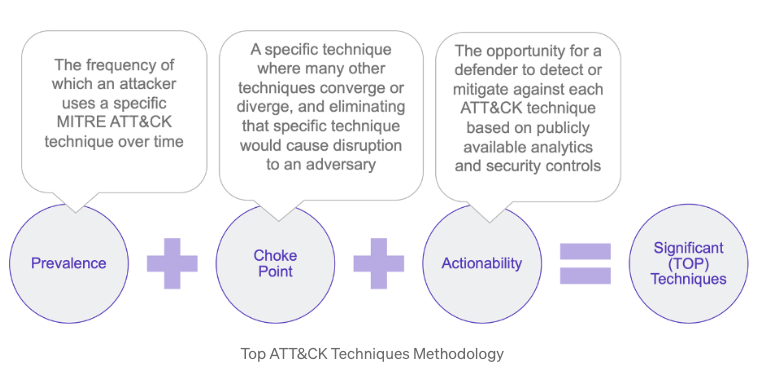

The Center’s methodology for scoring the 500 techniques and sub-techniques found within MITRE’s knowledge base is derived from combining prevalence, chokepoints, and actionability [4]. To gain deeper insight into the methodology, The Center recently released a blog focused on the methodology and the actionability of the Top 10 Technique Calculator [5].

To summarize, The Center has collected metrics on the prevalence of an attack technique as it relates to adversaries and its frequency of use over historical evidence. By determining how prevalent an attack technique is found within intelligence reports related to specific adversaries, The Center can grade techniques in a way that highlights which techniques have the “highest frequency” of use.

The Center defines chokepoints as the convergence of different techniques on one specific technique, where preventing the execution of that technique would inhibit or degrade the ability of the adversary to continue the attack chain. The Center grades this chokepoint based on the mitigations that the user has selected to mitigate it, and thus degrade the adversary’s ability to execute the attack chain.

- At SpecterOps, we define chokepoints a bit differently. As we often consult detection engineers and threat hunters, SpecterOps defensive consultants tend to focus on the atomic technique and attempt to determine common denominators across a variety of methods and procedures to execute the atomic technique. The Center’s and SpecterOps’ definitions are the same; we are just focused on two different levels of hierarchy.

Finally, The Center utilizes metrics to determine the actionability of a targeted technique. By quantitatively identifying the number of publicly available methods that a defender can use to mitigate or detect the target technique, an empirical weight is then attributed to the technique. The combined metrics are then utilized to grade the target technique with a score of priority.
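To make the idea of combining the three metrics concrete, the sketch below uses a simple weighted average of normalized components. This is an assumption made purely for illustration and is not The Center's formula; the actual scoring is described in its methodology [4]. The class name, value ranges, and weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TechniqueMetrics:
    prevalence: float     # assumed normalized to 0..1
    chokepoint: float     # assumed normalized to 0..1
    actionability: float  # assumed normalized to 0..1


def combined_score(metrics, weights=(1.0, 1.0, 1.0)):
    """Illustrative weighted average of the three components (not The Center's formula)."""
    w_prev, w_choke, w_act = weights
    weighted_sum = (
        w_prev * metrics.prevalence
        + w_choke * metrics.chokepoint
        + w_act * metrics.actionability
    )
    return weighted_sum / (w_prev + w_choke + w_act)


example = TechniqueMetrics(prevalence=1.0, chokepoint=0.5, actionability=0.0)
print(combined_score(example))  # 0.5
```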

Conclusion

The detection engineering backlog is a vital starting point for every detection engineering function. By providing an area of input into the detection engineering backlog, cross-functional efficiency can enhance the capability of the detection engineering function.

The prioritization methods provided are a combination of strategic guidance from SpecterOps and the use of The Center’s Top Ten Techniques project. Utilizing these two methods can enhance the prioritization structure of your organization’s detection engineering backlog; however, these methods are not perfect. MITRE’s knowledge base of techniques was never designed to be empirically scored [6].

These combined methods are not a bolt-on solution for prioritization, and both have limitations and logic gaps; however, they provide a stable platform from which to begin prioritizing the detection engineering backlog and to generate more confidence in selecting the most critical attack techniques.

References

[1] On Detection: Tactical to Functional — Part 7: Synonyms, Jared Atkinson

[2] Top Attack Techniques Calculator, Center for Threat Informed Defense

[3] Top Attack Technique Calculator Spreadsheet, Center for Threat Informed Defense

[4] Methodology, Center for Threat Informed Defense

[5] Top Attack Technique Calculator Web-version, Center for Threat Informed Defense

[6] Where to Begin, Prioritizing ATT&CK Techniques, Mike Cunningham, Alexia Crumpton, Jon Baker, Ingrid Skoog

Prioritization of the Detection Engineering Backlog was originally published in Posts By SpecterOps Team Members on Medium.